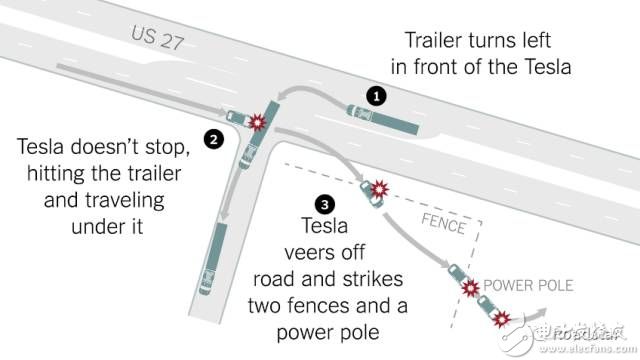

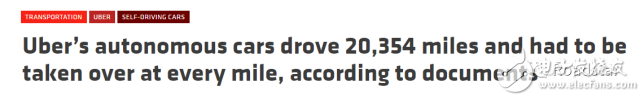

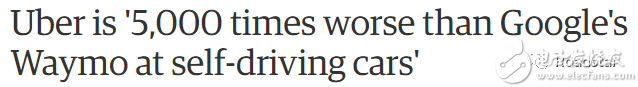

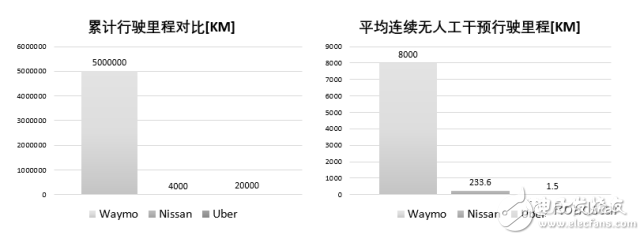

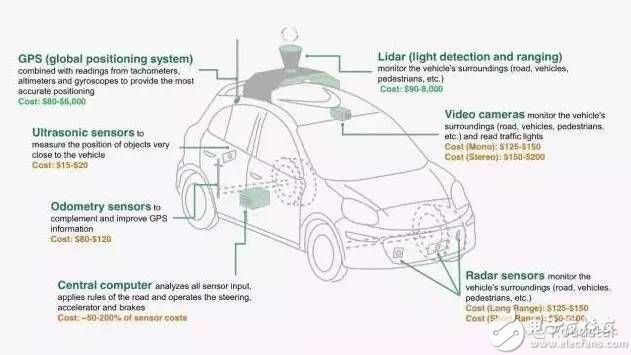

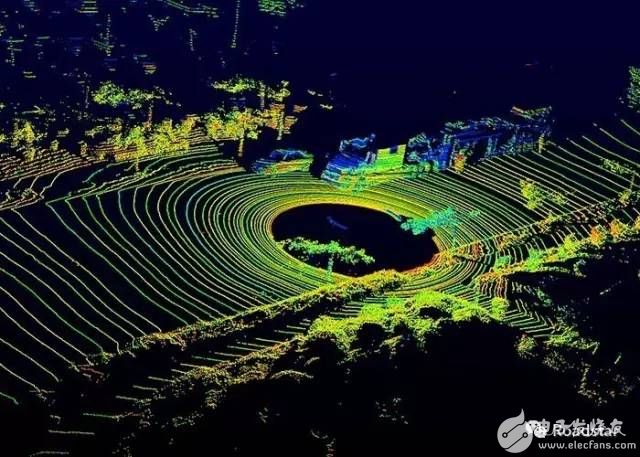

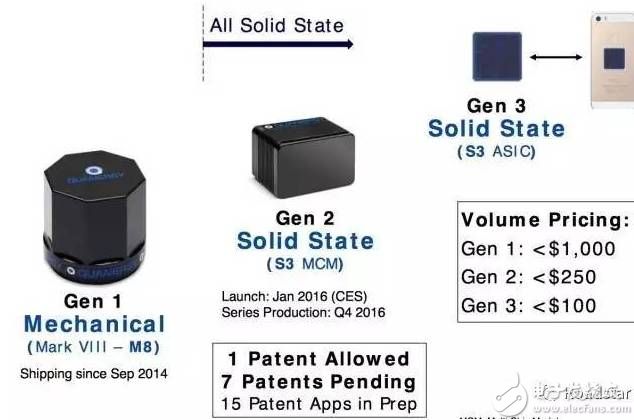

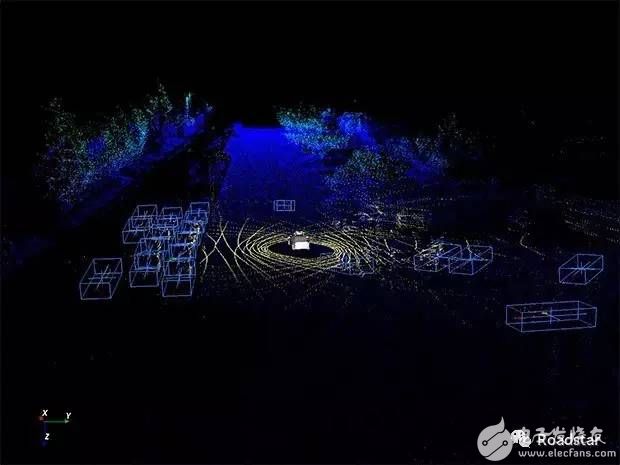

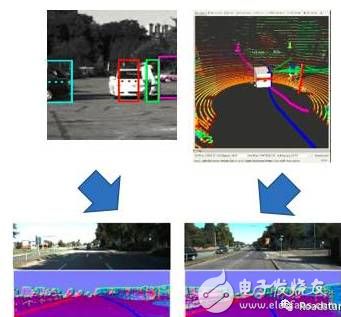

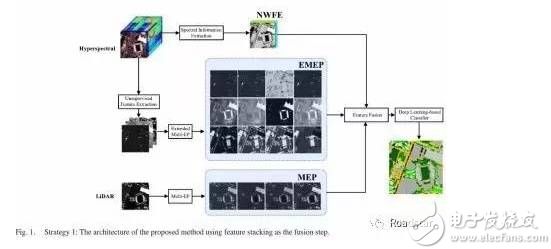

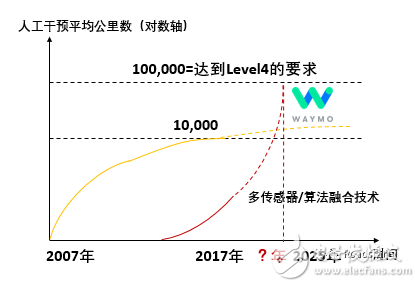

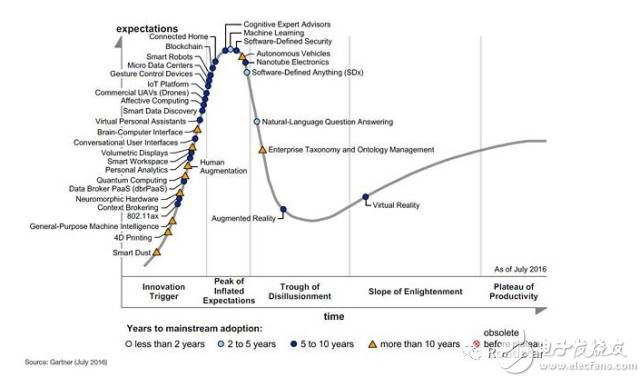

1. Analysis of the status quo of the driverless industry

1.1 The origin: DARPA

The current driverless craze originated with the DARPA Grand Challenge, organized by the US Department of Defense. In the first event, in 2004, no team finished the course, but in the off-road race of 2005 many teams finished, and Stanford University and Carnegie Mellon University took first and second place. In 2007, the DARPA Urban Challenge moved the competition to urban road conditions. This time Carnegie Mellon University, Stanford University and Virginia Tech took first, second and third place respectively.

(Figure 1: Two critical DARPA Challenges that drove driverless research in academia)

Through these Department of Defense competitions, the feasibility of driverless technology was demonstrated to the world.

1.2 Google carries DARPA's technology forward, developing it continuously since 2009

Google saw the future of driverless technology in the DARPA challenges. In 2009, Sebastian Thrun, head of the Stanford AI Lab and leader of Stanford University's DARPA team, was recruited by Google and continued his driverless research there with his original team. Google X was formally established in January 2010.

(Figure 2: Sebastian Thrun, head of the AI Lab at Stanford University)

(Figure 3: Google's early driverless cars)

From 2009 to June 2016, Google's driverless vehicles covered a total of 2,777,585 kilometers. In August 2016 alone, they traveled 202,777 kilometers. To date, Google's driverless vehicles have accumulated 5 million kilometers. With the latest technology, they average about 8,000 km per manual intervention.
In December 2016, Google officially spun the driverless business out of Google X and established the independent company Waymo.

(Figure 4: The driverless business leaves Google X to become the subsidiary Waymo)

Thanks to Google's push, everyone in Silicon Valley could see this cutting-edge technology in action, and it quickly gained popularity. At present, Google's driverless technology is recognized as the most advanced in the world.

1.3 Tesla's exploration: the radical pioneer

Since the end of 2014, Tesla has offered users an optional "assisted driving package" built on ADAS technology provided by Mobileye. Later, because of differences over the technical route, Tesla ended its cooperation with Mobileye in July 2016. Tesla's current technical route relies on 8 surround cameras, 1 millimeter-wave radar and 12 ultrasonic sensors. Tesla's ambitions keep growing: it claims it will achieve fully driverless capability by the end of 2017 and put it into full operation in 2019.

(Figure 5: Tesla's Autopilot, which relies mainly on cameras for recognition)

(Figure 6: Equipment from Mobileye, Tesla's partner at the time)

(Figure 7: Mobileye, a specialist ADAS company)

(Figure 8: Tesla's multi-sensor solution)

Even the simplest ADAS system can achieve fairly high accuracy under good lighting conditions, but its safety is generally considered not to be up to standard. Tesla's argument is that its cars have accumulated 1.3 billion miles in "assisted driving mode" and that the accident rate so far is quite low, no more than half that of human drivers. On this reasoning, the current autonomous driving system already performs twice as well as a human.

In reality, in May 2016 a Tesla owner who had switched on the automatic driving mode was watching a video in the car when it crashed into a trailer. In the subsequent investigation, Tesla was cleared of liability because its disclaimer requires the owner to remain responsible for driving and not to hand control over to the system.
(Figure 9: A Tesla in a serious accident while in automatic driving mode)

(Figure 10: The car damaged in the serious accident)

As a result, Tesla claims that its technology can achieve "completely driverless" operation at Level 4 or above, while in practice, to avoid liability, it requires users to treat the system only as a driving aid.

Tesla's technical route is the more radical one, but because of its broad consumer base it has attracted strong attention from the capital market, and it can be said to have set off the driverless craze. Google's cars have been improving quietly, but few people have seen them on the road, whereas Tesla has a large number of owners. From direct experience, owners feel that even an ADAS system is not far from the driverless car they imagine. After all, the difference between 99% safety and 99.9% safety is not obvious to consumers.

(Figure 11: A Tesla store, with a luxury feel)

In addition, Tesla is extremely good at generating publicity, which has also fueled the craze.

(Figure 12: The steady stream of Tesla-related news)

1.4 Uber and Apple's exploration

In 2015, Uber's CEO officially announced that the company would use driverless technology to provide its services. Uber recruited a number of Carnegie Mellon researchers and adopted a multi-sensor fusion approach combining LiDAR and cameras. In August 2016, Uber acquired Otto, a driverless startup. In September of the same year, Uber launched a driverless taxi service in Pittsburgh, and in December it offered the same service in San Francisco.

So far, Uber's technology has lagged somewhat, and the actual results have not met Uber's expectations. At present, Uber's fleet has 43 vehicles that drive 20,000 miles per week in driverless mode, averaging 0.8 miles per manual intervention.
(Figure 13: Uber's driverless taxi service)

(Figure 14: Uber's driverless technology has been widely questioned)

At the end of 2014, Apple officially launched an ambitious car-making program called "Project Titan": a completely driverless electric vehicle. According to insiders, Apple's technology prototype was developed in cooperation with a robotics company and uses the latest multi-sensor fusion technology. The robotics company is a Virginia Tech spin-off that was once Virginia Tech's partner in the DARPA Challenge.

At first Apple wanted to build an electric car comparable to Tesla's, but in 2016 the strategy changed and Apple wanted to build an electric car with no steering wheel at all, expected to reach the market as early as 2020. In June 2017, Apple's CEO acknowledged Apple's interest in the driverless field but denied the idea of building a car, saying the company was instead researching software for driverless technology.

(Figure 15: Apple's Titan plan)

1.5 Comparing driverless technical indicators: how far away is Level 4?

Although companies differ in the indicators they choose, those applying for driverless testing permits must report their driverless operation in accordance with California regulations. The most important indicators are the cumulative driverless mileage and the average distance driven between manual interventions.

(Figure 16: Indicator comparison of Waymo, Nissan as a representative automaker, and Uber)

The conclusion is that Google's technology is still ahead of comparable companies, with a cumulative total of 5 million kilometers and, with its latest technology, an average of 8,000 kilometers per manual intervention. Nissan, which is relatively advanced among OEMs, averages about 230 km per manual intervention. Uber is probably still at an average of about 1.5 km per manual intervention.
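To make the size of these differences concrete, the figures quoted above can be put side by side. The sketch below is only illustrative arithmetic on the numbers in the text; the function names are hypothetical and nothing here comes from the companies' own reports.

```python
# Average distance between manual interventions, in km, as quoted in the text.
KM_PER_INTERVENTION = {
    "Google (Waymo)": 8000.0,
    "Nissan": 230.0,
    "Uber": 1.5,
}


def leader_advantage(figures):
    """For each company, how many times farther the best company
    drives between interventions (the leader itself gets 1.0)."""
    best = max(figures.values())
    return {name: best / km for name, km in figures.items()}


def interventions_per_1000_km(figures):
    """Expected number of manual interventions over 1,000 km of driving."""
    return {name: 1000.0 / km for name, km in figures.items()}


if __name__ == "__main__":
    advantage = leader_advantage(KM_PER_INTERVENTION)
    per_1000 = interventions_per_1000_km(KM_PER_INTERVENTION)
    for name in KM_PER_INTERVENTION:
        print(f"{name}: leader is {advantage[name]:.1f}x ahead, "
              f"{per_1000[name]:.3f} interventions per 1,000 km")
```

On these numbers, the leader drives roughly 35 times farther than Nissan and several thousand times farther than Uber between interventions.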
It can be seen that the gap is still large. According to news reports, Baidu in China is currently at a level comparable to Uber's.

The general view in the industry is that if driverless vehicles can be 10 times as safe as human drivers, they can replace people completely. The technical goal for fully realized autonomous driving may therefore be on the order of one manual intervention per 100,000 kilometers. It is precisely because Google's level is already so high that the capital market sees the possibility of reaching sufficient safety in the short term (3-5 years), and all companies are now sprinting to cover the technology's last five kilometers.

1.6 The booming driverless start-ups: the horn sounding the charge to victory

Since 2015, a number of driverless start-ups have appeared. Here we have picked some typical companies working close to Level 4 (for the definitions of driverless and assisted driving, see section 1.7) for analysis.

(Figure 17: Main Level 4 driverless startups, compiled from information available online)

It can be seen that in 2015 and 2016 many start-ups spun out of large companies and universities began to enter the field, and driverless system development has been flourishing on all sides. The "Whampoa Military Academies" of driverless technology, that is, the training grounds that supply its talent, are Stanford University, Google, and Baidu in China.

As the analysis above shows, the companies researching Level 4 technology are each trying to use their own technical solution to cover a "driverless last five kilometers" that is both safe enough and cost-controllable. Who will take the final crown? We believe that, as with engines, battery management and other core modules in the automotive industry, driverless technology should not end up dominated by a single company. In the end, there should be both large companies and some startups able to build products that meet the performance and cost standards.
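As a rough check on the 100,000 km target and the 3-5 year horizon mentioned in section 1.5, one can ask how fast the distance between interventions would have to grow each year. The sketch below assumes steady compound improvement, which is our simplifying assumption and not something the source claims; the function name is hypothetical.

```python
# Figures quoted in the text (km per manual intervention).
CURRENT_KM = 8_000.0     # Google's reported level
TARGET_KM = 100_000.0    # level the text suggests for full autonomy


def required_annual_factor(current_km, target_km, years):
    """Yearly multiplier needed to grow from current_km to target_km
    in the given number of years, assuming steady compound growth
    (an assumption made for illustration only)."""
    if years <= 0:
        raise ValueError("years must be positive")
    return (target_km / current_km) ** (1.0 / years)


if __name__ == "__main__":
    print(f"Total improvement needed: {TARGET_KM / CURRENT_KM:.1f}x")
    for years in (3, 5):
        factor = required_annual_factor(CURRENT_KM, TARGET_KM, years)
        print(f"Over {years} years: about {factor:.2f}x per year")
```

Under this assumption the leader would need to improve by a factor of 12.5 overall, which works out to somewhat more than doubling every year on the 3-year horizon.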
1.7 Driverless or assisted driving

The SAE classification divides driving automation into Level 0 through Level 5. More detailed descriptions of the standard are widely available online, so we will not go into them here. Below is a simple definition with examples.

(Figure 18: A description of the driverless classification)

By definition, Level 2 to Level 3 should be classified as "assisted driving". It is called assisted driving because at these levels the driver bears full responsibility for driving, and the system bears no responsibility for the consequences of any accident.

At present, the cost of a Level 2 ADAS system has dropped below 1,000 RMB, and the factory-installed price is within a few thousand RMB. It can be regarded as a well-commercialized intelligent automotive accessory. Overseas there is the well-known Mobileye, and in China many companies benchmarking against it are building ADAS systems and trying to catch up.

Level 2 technology can provide comprehensive driving assistance, but because its safety requirements are low, its effectiveness is often judged by the driver's subjective impression. The technical threshold can be said to be relatively low. Many OEMs are currently developing their own ADAS systems to replace Mobileye.

Level 3 builds on Level 2 by adding more sensors. With the safety margin improved, the system can take over control of the car completely under some road conditions. For example, when a car drives from the city onto the highway, it can cruise without driver input on the highway (Tesla already provides this feature). The problem is that the range of road conditions it can handle is relatively narrow, and the system still requires the human driver to take over within six seconds of a request, so it cannot truly replace the human.
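The level definitions above can be summarized in a small lookup. This is a minimal sketch: the level names follow the SAE J3016 taxonomy, while the grouping into "assisted driving" and "driverless" is this article's own classification, and the function names are hypothetical.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels, named as in the SAE J3016 taxonomy."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def article_category(level):
    """Grouping used in this article (not part of the SAE standard):
    Levels 2-3 are 'assisted driving', Levels 4-5 are 'driverless'."""
    if level >= SAELevel.HIGH_AUTOMATION:
        return "driverless"
    if level >= SAELevel.PARTIAL_AUTOMATION:
        return "assisted driving"
    return "not classified in the article"


def human_fallback_required(level):
    """Up to Level 3 a human must drive or be ready to take over on request."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, article_category(level),
              human_fallback_required(level))
```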
As for the companies emerging in China that build low-speed driverless vehicles, such as airport shuttle buses and golf carts for campuses and parks, the very low speed (10 km/h) makes the safety requirements much lower. In general, we classify this technology as Level 2 to Level 3.

Although Level 2 and Level 3 have more visible applications today, the technology they require is still clearly distinct from that of Level 4 and above. Perhaps contrary to what many people think, it is not possible to upgrade gradually from Level 2 to Level 4.

The core of Level 4 is complete driverlessness in urban environments, which requires a system with very high safety. The defining feature of Level 4 is that it can achieve "completely driverless" operation within a predetermined area. This makes it possible to replace human drivers commercially and to change the traditional relations of production. Level 2 and Level 3 technology, in the end, only "helps" the human driver drive the car better.

Because Level 4 poses huge technical challenges and opens up a correspondingly larger market, Level 4 driverless technology is currently the focus of the market.

2. Analysis of the status quo of the driverless industry

2.1 List of vehicle sensors

The sensors used in driverless vehicles fall broadly into the following categories: LiDAR (laser radar), cameras, GPS (including differential GPS), IMU (inertial measurement unit), millimeter-wave radar, ultrasonic radar, and so on. Humans can drive using only sight and hearing, but robots lack a brain that can reliably interpret scenes and must make up for this with stronger "senses". The sensors can be said to be the eyes and ears of a driverless car.

(Figure 19: Example of on-board sensors)

2.2 The main sensor: LiDAR

Among the different sensor categories, LiDAR, which came out of the DARPA challenges, is considered the most important and indispensable sensor for driverless driving.
Compared with other sensors, its biggest advantage is that it measures space very accurately and can therefore guarantee a relatively high safety margin.

(Figure 20: Comparison of sensor advantages and disadvantages)

(Figure 21: Velodyne's 64-line, 32-line and 16-line LiDAR)

(Figure 22: The world as LiDAR sees it)

The current global LiDAR supply is almost monopolized by Velodyne. Prices are high: the cheapest 16-line unit costs 7,900 US dollars and the most expensive 64-line unit costs 80,000 US dollars. A mechanical LiDAR rotates at high speed and perceives the surrounding space accurately in the form of a point cloud. Each driverless developer needs to build its own point cloud feature recognition technology.

2.3 Intense competition in LiDAR development

At present, Velodyne, which came out of the DARPA challenges, is the only relatively mature manufacturer in the world. But countless startups have been founded to challenge Velodyne's monopoly.

LiDAR itself has a new technical direction: abandoning Velodyne's mechanical rotating design and using phased-array technology to build solid-state LiDAR. Solid-state LiDAR can currently reach only 16 lines. However, it is easier to mass-produce and can be made at lower cost, so it is widely regarded as the ultimate LiDAR solution. The final cost could fall to about $100, similar to that of a camera.

(Figure 24: Solid-state LiDAR under development at Quanergy)

(Figure 25: Rendering of the solid-state LiDAR under development at Luminar)

(Figure 26: Quanergy's planned solid-state products and prices)

2.4 Is relying on cameras alone reliable? Where is the capability boundary of deep learning?

In recent years, progress in DNNs (deep neural networks), that is, deep learning, has given rise to many new algorithms. Many researchers have claimed that DNN technology alone can achieve human-like driving: relying only on visual information from cameras to reach Level 4 driverlessness.
Representatives of this view are AutoX, founded by Professor Xiao Jianxiong, an AI researcher from Princeton University, and Drive.ai, which came out of the Stanford University AI Lab and is associated with the wife of Wu Enda (Andrew Ng), one of the famous "troika" of AI.

However, perhaps having found that visual information and DNNs alone cannot reach Level 4 safety, and given the limitations of a single camera sensor, both companies have now changed their original claims. AutoX says it is "Camera First" and will add LiDAR once LiDAR prices fall. Drive.ai says it applies DNN algorithms to the point clouds generated by LiDAR, not only to camera images.

From our point of view, a fall in LiDAR prices is inevitable, so forgoing LiDAR only because its price is currently high, at the expense of the system's overall safety and reliability, may not be worth it.

(Figure 27: An AutoX car with multiple cameras)

(Figure 28: AutoX's car camera array)

(Figure 29: Drive.ai's car, with a visible array of six 16-line LiDARs)

(Figure 30: Drive.ai's recognition image from LiDAR data; DNNs are applied to the point cloud data as well)

2.5 The new Level 4 technology architecture: multi-sensor fusion and DNN algorithms

Google started working on driverless vehicles in 2009, building on the prototype Stanford used in the DARPA competition. Because camera performance was poor in 2009, Google's subsequent development relied heavily on LiDAR. On the algorithm side, the corner cases that the LiDAR algorithms ran into were handled by continually adding fixes, that is, by patching. To improve safety, Google even built the world's only 128-line LiDAR. In other words, in Google's technology system a high-line LiDAR is the indispensable main sensor.

In recent years, three new trends have emerged.

A. Replacing a high-line LiDAR with multiple low-line LiDARs

High-line LiDAR is demanding to manufacture, so Velodyne's and Google's output is limited and the cost is very high, which greatly hinders the adoption of LiDAR-based solutions. In recent years, many startups have found that by combining a number of 16-line LiDARs they can achieve results no worse than those of a single high-line LiDAR.

(Figure 31: Multiple-LiDAR arrays as an alternative to a 64-line LiDAR)

B. Multi-sensor fusion, especially algorithmic fusion of LiDAR and camera

Thanks to advances in computer vision and deep learning in recent years, the image recognition capability of cameras has made a qualitative leap compared with around 2010. Building on Level 4 driverless systems that use LiDAR as the main sensor and Level 2 ADAS that use cheaper cameras as the main sensor, there are new attempts to fuse LiDAR and camera data. Because the two sensors have complementary strengths, higher safety can be achieved with lower-performance sensors.

(Figure 32: Schematic diagram of deep fusion of camera and LiDAR data)

C. Applying DNN algorithms to LiDAR point cloud data

When Google started, DNNs were not yet widely used, so Google's point cloud recognition resembles classical computer vision, with algorithms that extract hand-designed features. Now that DNNs have proven effective, there are new attempts to use DNNs to learn from point cloud data and identify objects. Although the results have yet to be verified, we believe that once enough data is available this approach will eventually surpass traditional feature recognition algorithms.

(Figure 33: DNN applied to LiDAR data)

(Figure 34: Nvidia's DNN solution)

2.6 Is driverless driving a bubble?

For driverless cars to become widespread, the most critical factors are performance and price.
On price, LiDAR, the most expensive component in the industry, is expected to fall within the next five years to a level at which it is no longer an obstacle.

The key question now is whether driverless cars can meet everyone's expectations and reach technical maturity in the foreseeable short term.

In recent years, Google's technology has reached about 8,000 kilometers per manual intervention. To many people, this is already very close to Level 4. Tesla, for its part, is radically convinced that its solution of cameras, ultrasonic radar and millimeter-wave radar can already drive twice as well as a person.

Let us be conservative and assume that one manual intervention per 100,000 km is the basic requirement for Level 4 driverless operation.

(Figure 35: Technology development forecast; the yellow line is Google's solution and the red line represents the new technology architecture)

Based on the new technology architecture, we believe Level 4 driverless technology can be expected to reach sufficient safety at some point before 2025. Whether the driverless bubble bursts depends on the future technological progress represented by the dotted line in the figure.

In addition, according to Gartner's well-known Hype Cycle, driverless technology is already in the late stage of the bubble phase. Gartner predicts that widespread adoption will take more than 10 years, so it remains relatively conservative about driverless technology.

(Figure 36: Gartner's well-known Hype Cycle diagram)

In the end, whether driverless driving turns out to be a bubble will be answered by the technical progress of the next three years. Our team is full of confidence in the future of driverless technology.

3. The uniqueness of the Chinese market

3.1 Technical challenges of Chinese roads

At present, most of the world's driverless road tests take place in the Silicon Valley region.
Road traffic in China is more complicated than in Silicon Valley or in other developed countries.

The mainstream driverless algorithms today all have to solve the problem of corner cases. Whether this is done by continually patching the algorithm or by collecting data for DNNs, the system has to encounter a large number of corner cases in practice. Transplanting a Silicon Valley algorithm directly to China will therefore certainly fail. For example, Google's driverless car, which runs perfectly in the San Francisco Bay Area, had many problems when it went to Texas, not to mention China.

(Figure 37: Comparison of road conditions in China and the United States)

The Chinese market therefore urgently needs a system developed for China's road conditions. Without large-scale collection of road test data in China, Level 4 driverless operation in China is impossible.

3.2 Status of driverless driving in China

Under the "Made in China 2025" plan launched by the Ministry of Industry and Information Technology, China's driverless goals for 2020 and 2025 are as follows.

In the technical roadmap for the key areas of "Made in China 2025", intelligent connected vehicles are divided into four levels: DA, PA, HA, and FA. DA refers to driver assistance, PA to partial autonomous driving, HA to highly autonomous driving, and FA to fully autonomous driving, in which driving authority is handed over to the vehicle completely.

By 2020, the domestic (independently developed) share of automotive informatization products is to reach 50%, and the domestic share of DA and PA systems is to exceed 40%. Key sensor and controller technologies are to be mastered, with supply capacity sufficient for domestic-scale demand and product quality at an internationally advanced level. Construction of smart-transportation cities is to begin, with the share of domestic facilities above 80%.
By 2025, the domestic share of automotive informatization products is to reach 60%, and the domestic share of DA, PA, and HA systems is to exceed 50%; domestically developed smart trucks are to begin large-scale export. A Chinese standard for fully autonomous driving is to be formulated. Based on multi-source information fusion and multi-network integration, and using artificial intelligence, deep mining and automatic control technology together with an intelligent environment and supporting facilities, autonomous driving is to change the way people travel, eliminate congestion and improve road utilization. Overall energy consumption is to fall by 10%, emissions by 20% and traffic accidents by 80%, with traffic deaths basically eliminated. The FA equipment rate is to reach 10%, and the equipment rate of domestic systems 40%.

Meanwhile, many enterprises and universities in China have begun to develop Level 4 driverless systems independently. Typical examples are Baidu, Volvo, the National University of Defense Technology and SAIC. Many have carried out longer-distance highway experiments and experiments in enclosed areas.

(Figure 38: Road tests of driverless vehicles by some enterprises, universities and research institutes in China)

3.3 Building driverless car towns with Chinese characteristics

Driven by governments and developers, a construction boom in "driverless car towns" is under way across China. Typical examples are Shenzhen and Zhangzhou in Fujian.

Shenzhen's driverless car town (excerpted from news reports):

Southern University of Science and Technology, the University of Michigan, and the Frontier Industry Fund signed a cooperation agreement in Shenzhen, announcing the joint construction of a driverless demonstration base. The total investment in the project is 10 billion yuan. It will be located in Longgang District or the Shenzhen Cooperation Zone in Shenzhen; the specific site is still being explored and planned. This demonstration base is a driverless town.
According to Wang Lejing, co-founder of the Frontier Industry Fund, the town will bring together technology R&D and innovation companies related to smart cars, and will build a driverless demonstration operating area along with residential, educational, hospital and other infrastructure. Employees will be able to walk to work, and investors will be able to meet with several companies in a single day. Wang Lejing wants to create a "Silicon Valley + Mcity" model for the smart car industry (Mcity is the world's first test site dedicated to testing the potential of connected and autonomous vehicles). According to the plan, the first batch of test vehicles will be tested at the base between the third and fourth quarters of next year.

The driverless car town in Zhangzhou, Fujian (excerpted from news reports):

Zhangzhou's "world's first city-level driverless car social laboratory" is basically the same concept. It is said to be Mcity's exclusive partner in China and to have signed strategic cooperation agreements with the Michigan government and with Cupertino in Silicon Valley. It will be located in the Zhangzhou China Merchants Economic and Technological Development Zone (hereinafter "Zhangzhou China Merchants Development Zone").

The project plans to build three related, progressively larger test scenarios: a 600,000 square meter closed test site; a 2 million square meter industrial park experimental site (both an industrial cluster and a new experimental mode); and the entire development zone (56 square kilometers), where large-scale experiments on regulatory approaches to driverless technology will be carried out, producing a series of institutional frameworks for driverless driving covering the legal system, commercial insurance and technical standards.

The total area of the Zhangzhou China Merchants Development Zone is 56 square kilometers, a city-scale physical space.
The project will also set up a 10 billion industrial fund for the construction of laboratories and support for the enterprises.