1 Visual recognition system composition

The visual recognition system of the dicing machine is a computer-based real-time image processing system. As shown in Figure 1, it consists of an optical lighting system, a CCD camera, image processing software, and other components.

2 Technical route selection

Given the current state of the field at home and abroad, our starting point in building a framework of our own visual recognition technology for dicing machines was to use existing mature resources and theoretical algorithms to develop an efficient, practical set of visual algorithms suited to the characteristics of the equipment itself. These algorithms in turn form a machine vision library for the fully automatic dicing machine.

The purpose of the recognition system is to achieve automatic alignment. Provided the worktable itself is accurate, the precision of the image processing algorithm plays a decisive role in the accuracy of the visual auto-alignment system, and its core is the pattern recognition algorithm. Commonly used recognition methods currently include statistical pattern recognition, feature extraction, neural network recognition, and template matching. Domestic work in this field started relatively late; research is concentrated mainly in colleges and universities and focuses on theory, with little market uptake. As a result, development in the field of machine vision lags significantly behind Europe and the United States.

We explored a variety of approaches, including cooperating with foreign machine vision companies to customize visual identification systems according to specific functional module requirements. The problem with this approach is that we must bear the foreign companies' expensive development costs and high profit margins, which sharply increases equipment cost, and our own technical secrets are very likely to be disclosed in the course of cooperation. In practice, this path proved infeasible. A more suitable option is to purchase software development kits (SDKs) from foreign vision companies for secondary development, which is technically less demanding. However, such SDKs are not tailored to the application and in actual use do not fully meet on-site requirements: the cost of each machine rises while the problems remain unsolved. After continuous exploration and comparison of several algorithms common in the industry today, we finally decided to adopt a template geometric feature matching algorithm based on the OpenCV visual function library for the automatic dicing machine.

OpenCV is an open-source computer vision library originally developed by Intel. It is a cross-platform visual function library composed of mid- and high-level APIs, consisting of a series of C functions and a small number of C++ classes, and it implements many general-purpose algorithms in image processing and computer vision. This avoids re-researching mature low-level algorithms and saves a great deal of time. More importantly, it is free for both non-commercial and commercial use, so it adds no pressure to our equipment costs. Template geometric feature matching is a relatively new visual positioning technology that appeared on the market in the late 1990s. Many well-known semiconductor equipment manufacturers, including DISCO and Tokyo Precision of Japan and K&S of the United States, are understood to have adopted related technologies in the vision systems of their main equipment. Unlike traditional gray-level matching, geometric feature matching learns the geometry of the object and its geometric features within the region of interest, and then searches the image for objects of similar shape. Because it does not depend on specific pixel gray values, it has, in principle, several advantages over traditional visual positioning algorithms. The algorithm has been verified in the development of a fully automatic dicing machine. Applying this technology improves the visual recognition efficiency and automatic alignment capability of the machine, so that the object can be positioned accurately and aligned automatically for cutting even when the angle, size, brightness, and similar properties of the workpiece change.
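The text does not spell out how the geometric feature matching itself is scored. As a rough, hypothetical illustration of matching on the positions of geometric (edge) points rather than on gray values, the Python sketch below scores a template's edge points against a binary edge map of the scene and searches all translations exhaustively. It assumes a precomputed edge map and handles translation only; the rotation and scale changes mentioned above are out of scope, and the names `match_score` and `best_translation` are hypothetical.

```python
def match_score(edge_map, template_points, dx, dy):
    """Fraction of template edge points that fall on edge pixels of the scene
    when the template is shifted by (dx, dy). edge_map is a list of rows of 0/1."""
    h, w = len(edge_map), len(edge_map[0])
    hits = 0
    for x, y in template_points:
        u, v = x + dx, y + dy
        if 0 <= v < h and 0 <= u < w and edge_map[v][u]:
            hits += 1
    return hits / len(template_points)


def best_translation(edge_map, template_points):
    """Exhaustive search over non-negative shifts; returns (score, dx, dy).
    Ties keep the first shift found in row-major order."""
    h, w = len(edge_map), len(edge_map[0])
    best = (-1.0, 0, 0)
    for dy in range(h):
        for dx in range(w):
            score = match_score(edge_map, template_points, dx, dy)
            if score > best[0]:
                best = (score, dx, dy)
    return best


# Usage: outline of a 3x3 square as (x, y) template points, placed at (4, 2) in an 8x8 scene.
square = [(0, 0), (1, 0), (2, 0), (0, 1), (2, 1), (0, 2), (1, 2), (2, 2)]
scene = [[0] * 8 for _ in range(8)]
for x, y in square:
    scene[y + 2][x + 4] = 1
print(best_translation(scene, square))  # (1.0, 4, 2)
```

Because the score depends only on where edge points fall, any brightness change that leaves the edge map unchanged leaves the score unchanged, which is the property that separates this family of methods from gray-level correlation.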

3 Identification system design

3.1 Design Process

Visual recognition systems are generally similar in design structure; the key lies in the choice of recognition algorithm. The design structure of the dicing machine's visual recognition system is shown in Figure 2.

In applying the algorithm, and considering the actual conditions at the dicing machine's work site, we preprocess the captured template image of the workpiece to be diced so that the feature points of the pre-stored template image can be extracted effectively. This preprocessing mainly includes reducing and filtering out noise in the image and enhancing the geometric feature points to be matched. Filtering and segmentation are the two key steps before the geometric features of the pre-stored template image are extracted.

3.2 Filter design principle

In general, on-site noise appears as high-frequency signals in an image, so typical filters work by attenuating or eliminating high-frequency components in Fourier space. However, structural details of the workpiece to be cut, such as edges and corners, are also high-frequency components. How to preserve as much of the image's structural features as possible while filtering out noise has therefore always been the main research direction in image filtering.
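To make this trade-off concrete, the sketch below applies a simple linear low-pass filter, a moving average, to a hypothetical 1D gray-level profile containing an isolated noise spike and a step edge. Both are high-frequency features, so the filter attenuates the spike but also smears the edge. The profile values and the window radius are illustrative assumptions.

```python
def moving_average(signal, radius=1):
    """Linear moving-average filter; the window is truncated at the borders."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


# Hypothetical gray-level profile: a noise spike at index 3, a step edge between 6 and 7.
profile = [0, 0, 0, 90, 0, 0, 0, 100, 100, 100, 100]
print([round(v, 1) for v in moving_average(profile)])
# [0.0, 0.0, 30.0, 30.0, 30.0, 0.0, 33.3, 66.7, 100.0, 100.0, 100.0]
```

The spike drops from 90 to 30 but spreads over three samples, and the sharp 0 to 100 step becomes a ramp, which is exactly the loss of structural detail described above.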

Linear filters include moving-average filters and Gaussian filters. The most commonly used nonlinear filters are the median filter and the SUSAN (Smallest Univalue Segment Assimilating Nucleus) filter. Of these, SUSAN filtering preserves the structural features of the object while filtering out image noise, which meets the smoothing requirements for the positioning template image in the automatic alignment system of the automatic dicing machine. SUSAN is a general name for a class of image processing algorithms, including filtering, edge extraction, and corner extraction, which all share the same basic principle.
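For comparison with the linear filters, here is a minimal sketch of the median filter, the simplest of the nonlinear filters named here (it is not SUSAN), applied to a hypothetical 1D profile with a noise spike and a step edge. The profile and window radius are illustrative assumptions.

```python
def median_filter(signal, radius=1):
    """Nonlinear median filter; the window is truncated at the borders and, for
    even-length border windows, the upper of the two middle values is taken."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = sorted(signal[lo:hi])
        out.append(window[len(window) // 2])
    return out


# Hypothetical profile: a noise spike at index 3, a step edge between 6 and 7.
profile = [0, 0, 0, 90, 0, 0, 0, 100, 100, 100, 100]
print(median_filter(profile))
# [0, 0, 0, 0, 0, 0, 0, 100, 100, 100, 100]
```

The isolated spike is removed entirely and the step edge stays sharp. The median filter does, however, tend to round off thin lines and sharp corners, which is part of why an edge-aware weighted filter such as SUSAN is attractive for positioning templates.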

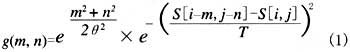

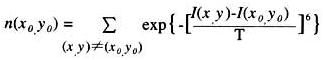

The SUSAN filter is essentially a weighted mean filter whose weighting factor is the similarity test function. Equation (1) defines a similarity test function that measures how similar the pixel S[i,j] is to its neighbor S[i+m,j+n], where (m,n) is the offset. The similarity function considers not only the difference between the gray values of S[i+m,j+n] and S[i,j] but also the distance between the two pixels.

Where S[i+m,j+n] and S[i,j] are pixel gray values, and T is the threshold for measuring gray-value similarity; its value has little effect on the filtering result. θ can be regarded as the variance of a Gaussian smoothing filter: a larger θ gives a stronger smoothing effect, while a smaller θ preserves more detail in the image. After many experiments, we found θ = 4.0 to be suitable.
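Equation (1) is not reproduced in the text. Assuming it takes the standard SUSAN filter form from Smith and Brady, $w(m,n) = \exp\left(-\frac{m^2+n^2}{2\theta^2} - \frac{(S[i+m,j+n]-S[i,j])^2}{T^2}\right)$, the similarity weight can be sketched as below. The exact placement of θ and T in the authors' equation may differ; the default `T = 20.0` is a hypothetical value, and only θ = 4.0 comes from the text.

```python
import math


def susan_weight(center, neighbor, m, n, T=20.0, theta=4.0):
    """Assumed SUSAN similarity weight between S[i,j] (center) and S[i+m,j+n]
    (neighbor): a Gaussian in distance times a Gaussian in gray difference.
    T=20.0 is a hypothetical default; theta=4.0 is the value chosen in the text."""
    spatial = (m * m + n * n) / (2.0 * theta * theta)
    brightness = ((neighbor - center) / T) ** 2
    return math.exp(-spatial - brightness)


# Same offset, different gray difference: the dissimilar neighbor weighs far less.
print(susan_weight(100, 100, 0, 1) > susan_weight(100, 140, 0, 1))  # True
# Same gray value, larger theta: distant neighbors keep more weight (stronger smoothing).
print(susan_weight(100, 100, 3, 0, theta=8.0) > susan_weight(100, 100, 3, 0, theta=2.0))  # True
```

With T = 20, a neighbor 40 gray levels away from the center receives exp(-4), about 0.018 times the weight of an identical neighbor at the same offset, which is how edges end up protected from smoothing.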

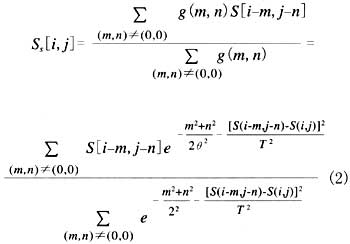

The filter function defined by the similarity measurement function is given by equation (2):

Where S'[i,j] is the gray value of the pixel after filtering. Equation (2) shows that neighbors with high similarity receive large weights and therefore strongly influence the filtering result, while dissimilar neighbors receive small weights and have little effect. Because SUSAN filtering excludes the center point itself from the weighted sum, it can effectively remove impulse noise.
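Assuming equation (2) is the normalized weighted sum $S'[i,j] = \frac{\sum_{(m,n)\neq(0,0)} w(m,n)\,S[i+m,j+n]}{\sum_{(m,n)\neq(0,0)} w(m,n)}$ with the standard SUSAN weight $w(m,n) = \exp\left(-\frac{m^2+n^2}{2\theta^2} - \frac{(S[i+m,j+n]-S[i,j])^2}{T^2}\right)$, a direct (slow, pure-Python) sketch looks like this. The 5×5 window (`radius=2`) and `T=20.0` are hypothetical choices; the text only fixes θ = 4.0. A production version would of course work on OpenCV image buffers rather than nested lists.

```python
import math


def susan_filter(img, radius=2, T=20.0, theta=4.0):
    """Sketch of SUSAN smoothing on a 2D list of gray values.

    Each output pixel is the weighted mean of its neighbors in a
    (2*radius+1)^2 window using the assumed SUSAN weight; the center pixel
    is excluded, and neighbors outside the image are skipped."""
    h, w = len(img), len(img[0])
    out = [[float(v) for v in row] for row in img]
    for i in range(h):
        for j in range(w):
            num = den = 0.0
            for m in range(-radius, radius + 1):
                for n in range(-radius, radius + 1):
                    if m == 0 and n == 0:
                        continue  # the center point is not included
                    ii, jj = i + m, j + n
                    if not (0 <= ii < h and 0 <= jj < w):
                        continue  # neighbor lies outside the image
                    d = img[ii][jj] - img[i][j]
                    wgt = math.exp(-(m * m + n * n) / (2.0 * theta * theta) - (d / T) ** 2)
                    num += wgt * img[ii][jj]
                    den += wgt
            if den > 0.0:
                out[i][j] = num / den
    return out


# A flat 50-gray patch with one impulse at the center: the impulse is removed.
patch = [[50] * 7 for _ in range(7)]
patch[3][3] = 250
print(round(susan_filter(patch)[3][3], 3))  # 50.0
```

On a step image (three columns at 0, three at 100), pixels on either side of the step stay within a tiny fraction of a gray level of their original values, because cross-edge neighbors receive weights on the order of exp(-25).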

3.3 Image Analysis Algorithm Selection

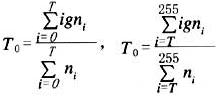

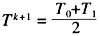

After filtering removes on-site noise interference, the next step is to divide the image into meaningful, mutually non-overlapping regions, each corresponding to the surface of an object. The division is based on pixel properties such as spectral characteristics, spatial characteristics, gray values, and color. This step is an important part of the transition from image processing to image analysis and is a general-purpose computer vision technique. Image segmentation algorithms fall into two categories: gray-level threshold segmentation based on metric space, and segmentation based on spatial region growing. For the automatic alignment system of the automatic dicing machine, gray-level threshold segmentation based on metric space is more suitable; it is equivalent to binarizing the image. The threshold is typically calculated from the image's gray-level histogram. We use an iterative algorithm, which computes the segmentation threshold for a bimodal histogram, and the results are satisfactory. First, determine the maximum and minimum gray values $M_{\max}$ and $M_{\min}$ in the image and set the initial threshold to:

$$T_0 = \frac{M_{\max} + M_{\min}}{2}$$

According to the current threshold $T_k$, divide the image into two parts, target and background, and compute the average gray value of each part:

$$Z_1 = \frac{\sum_{i=M_{\min}}^{T_k} i\,n_i}{\sum_{i=M_{\min}}^{T_k} n_i}, \qquad Z_2 = \frac{\sum_{i=T_k+1}^{M_{\max}} i\,n_i}{\sum_{i=T_k+1}^{M_{\max}} n_i}$$

Where $i$ is the gray value and $n_i$ is the number of pixels whose gray value equals $i$. The new threshold is then:

$$T_{k+1} = \frac{Z_1 + Z_2}{2}$$

If $T_{k+1} = T_k$, the iteration ends; otherwise it continues with the new threshold.
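A minimal Python sketch of the iterative threshold selection described above, assuming integer gray levels: it starts from the midpoint of the maximum and minimum gray values, splits the histogram at the current threshold, averages the two class means to get the next threshold, and stops when the threshold no longer changes. Truncating each new threshold to an integer and the `max_iter` cap are assumptions added here to guarantee termination; the text does not say how non-integer thresholds are handled.

```python
def iterative_threshold(pixels, max_iter=100):
    """Iterative threshold selection for a bimodal gray-level histogram.

    pixels: non-empty iterable of integer gray values. Returns an integer
    threshold T; values <= T form one class and values > T the other."""
    hist = {}
    for p in pixels:
        hist[p] = hist.get(p, 0) + 1
    m_min, m_max = min(hist), max(hist)
    t = (m_min + m_max) // 2  # initial threshold (Mmax + Mmin) / 2, rounded down
    for _ in range(max_iter):  # cap guards against oscillation caused by rounding
        low = [(i, c) for i, c in hist.items() if i <= t]
        high = [(i, c) for i, c in hist.items() if i > t]
        if not low or not high:
            break  # only one class present: nothing to separate
        z1 = sum(i * c for i, c in low) / sum(c for _, c in low)
        z2 = sum(i * c for i, c in high) / sum(c for _, c in high)
        t_new = int((z1 + z2) / 2)  # new threshold, truncated to an integer
        if t_new == t:
            break  # T(k+1) == T(k): converged
        t = t_new
    return t


# Hypothetical image: 90 dark pixels at 0, 10 at 100, and 100 bright pixels at 200.
pixels = [0] * 90 + [100] * 10 + [200] * 100
print(iterative_threshold(pixels))  # 105 (the initial guess was 100)
```

The resulting value would then be used to binarize the image, for example with OpenCV's `cv::threshold`.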

The image preprocessing described above can readily be implemented with the OpenCV visual function library.

The geometric feature point set is a set of points that correctly reflects the position of the positioning mark, and the choice of features strongly influences the final template matching. More geometric feature points yield higher matching accuracy but slower matching; fewer points yield faster matching but lower accuracy. We therefore ran many experiments to select the most suitable geometric feature points, balancing matching speed against accuracy. For this system's application, the geometric edge points of the positioning template are a good choice. To extract the geometric feature point set of the positioning template, the image is first segmented with the iterative algorithm, and the geometric edge points of the positioning template are then obtained with the SUSAN edge and corner extraction algorithm.

3.4 Geometric edge corner extraction principle

SUSAN geometric edge extraction processes the pixels within a window of a given size to obtain an initial corner response at the window's center, and then finds the local maxima among all initial responses to obtain the final set of geometric edge points. The algorithm is as follows:

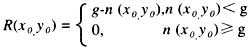

(1) The number n(x0, y0) of pixels in the window whose gray values are similar to that of the window's center pixel is calculated by the following two formulas:

(2) The initial response of the corner point is obtained by:

(3) Repeat steps (1) and (2) to obtain the initial corner response at every pixel in the image, then find the local maxima to obtain the positions of the edge point set and the corner points.

The geometric threshold affects the output: it changes not only the number of output corners but, more importantly, their shape. For example, reducing the geometric threshold makes the detected corners sharper. The grayscale difference threshold T has little effect on the shape of the output corners, but it does affect their number. T defines the maximum grayscale variation allowed within the window, and in the workpiece to be diced the grayscale difference between the pattern template and its background is the largest in the image; therefore, reducing T lets the algorithm detect smaller geometric edge changes and output more corner points.
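The three steps above can be sketched in Python as follows. The formulas for steps (1) and (2) are not reproduced in the text, so this sketch assumes the basic SUSAN definitions: a window pixel counts as similar when its gray difference from the center is at most T, n is the number of similar pixels in the window (center included), and the initial response is g - n when n < g and zero otherwise, where g is the geometric threshold. The square 5×5 window (classic SUSAN uses a circular 37-pixel mask), T = 20, and g = half the window are hypothetical choices.

```python
def susan_response(img, radius=2, T=20, g=None):
    """Initial SUSAN corner response at each interior pixel.

    n counts window pixels (center included) whose gray value differs from the
    center by at most T; the response is g - n where n < g, else 0.
    Pixels closer than `radius` to the image border are left at 0."""
    h, w = len(img), len(img[0])
    offsets = [(m, n) for m in range(-radius, radius + 1)
               for n in range(-radius, radius + 1)]
    if g is None:
        g = len(offsets) / 2  # hypothetical default: half the window size
    resp = [[0.0] * w for _ in range(h)]
    for i in range(radius, h - radius):
        for j in range(radius, w - radius):
            c0 = img[i][j]
            n_sim = sum(1 for m, n in offsets if abs(img[i + m][j + n] - c0) <= T)
            if n_sim < g:
                resp[i][j] = g - n_sim
    return resp


def local_maxima(resp):
    """Positive responses that are >= all 8 neighbors (simple non-maximum suppression)."""
    h, w = len(resp), len(resp[0])
    points = []
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            v = resp[i][j]
            if v > 0 and all(v >= resp[i + a][j + b]
                             for a in (-1, 0, 1) for b in (-1, 0, 1)):
                points.append((i, j))
    return points


# Hypothetical 11x11 image: a bright square whose top-left corner is at row 5, column 5.
img = [[100 if r >= 5 and c >= 5 else 0 for c in range(11)] for r in range(11)]
resp = susan_response(img)
print(local_maxima(resp))  # [(5, 5)]
```

With g = 12.5 only the corner responds. Raising g to 18.75 (three quarters of the window) also makes pixels on both sides of a straight edge respond, since they see n = 15 of 25 similar pixels, which illustrates how the geometric threshold shapes the output.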

Clearly, in the automatic alignment system of the dicing machine, basing the matching on the geometric feature points of the template image significantly reduces the number of points involved, greatly shortens computation time, and thus greatly speeds up automatic alignment.
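A rough way to see the saving: the sketch below counts how many points a matcher would compare per candidate position when using a filled template versus only its edge points. It uses a simple boundary-pixel test as a stand-in for SUSAN edge extraction (it is not SUSAN), and the square template is hypothetical.

```python
def boundary_points(mask):
    """Foreground pixels with at least one 4-neighbor that is background or
    outside the image: a simple stand-in for an edge point set."""
    h, w = len(mask), len(mask[0])
    points = []
    for i in range(h):
        for j in range(w):
            if not mask[i][j]:
                continue
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ii, jj = i + di, j + dj
                if not (0 <= ii < h and 0 <= jj < w) or not mask[ii][jj]:
                    points.append((i, j))
                    break
    return points


# Hypothetical binarized template: a filled 20x20 square in a 40x40 image.
mask = [[1 if 10 <= r < 30 and 10 <= c < 30 else 0 for c in range(40)] for r in range(40)]
area = sum(map(sum, mask))
edges = len(boundary_points(mask))
print(area, edges)  # 400 76
```

Here the edge set has 76 points against 400 for the filled region, roughly a five-fold reduction per candidate position. Because the perimeter grows linearly with template size while the area grows quadratically, the ratio improves further for larger templates.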

4 Conclusion

All of the above algorithms are implemented on the basis of the OpenCV visual function library. The entire image processing pipeline runs on a PC and was developed with VC++ 6.0. After continuous field experiments, we conclude that the feature points of the positioning template image obtained with SUSAN filtering, iterative segmentation, and SUSAN geometric edge and corner extraction, based on the OpenCV visual function library, are ideal: they fully retain the contour features of the pattern while greatly reducing the number of feature points, and can effectively improve the accuracy and speed of image-matching-based automatic alignment on the dicing machine.

Analysis of design principle of scriber visual recognition system