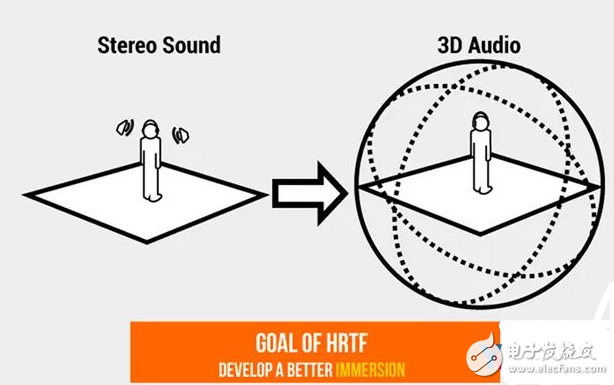

In VR, audio is a promising way to deepen immersion, because it gives the user more accurate positional cues. Realistic audio is an important prerequisite for a sense of presence in virtual reality. Yet for a listener in a virtual world, accurate sound positioning alone cannot create presence, because real-world acoustics are far more complex than the approximations traditional methods rely on.

Why audio is important to VR, and how AMD solves this problem

Audio processing in virtual reality calls for a new way of thinking. Over years of game and video development, the fidelity bar for audio rendering has stayed relatively low, especially compared with contemporary graphics and film/video rendering. Although human hearing is inherently three-dimensional, flat-screen games, movies, and videos often make minimal use of 3D and other advanced audio rendering techniques, because all of the graphics and video are presented in front of you. When you face a 2D screen and turn around because you heard a sound behind you, all you see is a speaker or the wall of your apartment. Apart from some FPS games, overly realistic audio in a flat-screen game or movie can distract the player or viewer, especially when it does not match what is on screen. For example, cinema surround sound almost universally uses the rear and side speakers for ambient fill, but almost never places important auditory cues there, because doing so would pull the viewer's attention away from the screen. Head-mounted displays have changed all of this. The user can turn in any direction and see a continuous visual scene, and as the technology advances, users can already walk around freely in the virtual world. Advanced VR systems are expected to give users a sense of presence close to that of consensus reality.
Studies have shown that realistic audio is an important prerequisite for establishing a sense of presence in virtual reality. So what is the "secret recipe" for realistic audio? A common view is that precise spatial and positional rendering with a Head-Related Transfer Function (HRTF) is sufficient. If the user stays in a fixed position, or is carried through the scene along a fixed path (a "magic carpet ride"), the audio designer can bake all environmental effects, such as reverb, occlusion, reflection, diffraction, absorption, and diffusion, into each sound in advance. In that case the view may hold, because the HRTF takes care of localizing each sound. But once the user starts moving freely in the scene (even within a limited tracked area), this approach falls short. As the user moves or shifts head position, the reflection paths and environmental effects of every sound change continuously, so it is no longer practical to pre-bake them for each sound in the scene. The usual shortcut is to fold all of these effects into a single reverb plug-in, then use one reverb setting for the whole scene or assign different settings to different rooms. The industry has had technology providing this kind of rendering since the 1990s. For a listener in a virtual world, however, this approximation cannot create a sense of presence, even if each sound is positioned precisely. For example, suppose a VR user walks down a corridor with an open door to a room ahead on the left, and can hear sound coming from that room. With this approximation, after the user walks past the door the sound is still there, essentially unchanged.
In the real world, however, the user would hear the acoustic environment change continuously: occlusion by the room's walls; diffraction at the doorway; reflections from the walls, floor, and ceiling; and diffusion and absorption by the materials of the interior walls, floor, and ceiling and by the objects and furniture in the room. Traditional audio design and rendering, which bakes room reverberation in the studio, applies simple attenuation and low-pass filtering to each source, and uses HRTF for positioning, can produce believable sound but cannot create a sense of presence. This holds even when the audio designer uses realistic curves for distance attenuation and source filtering, and the HRTF position is updated continuously as the listener's ears or the sound source move. Traditional methods fall short because real-world acoustics are more complex than these approximations capture, and through extensive exposure and adaptation the human brain has been trained to recognize real-world acoustics and tell them apart precisely. Hearing is a key product of evolution: sound is often the first signal of danger, and judging the direction and distance of a sound in a noisy environment is an essential survival skill. One example of this ability is the so-called "cocktail party effect": a person focusing on one conversation can tune out other conversations and background noise. To bring environmental sound rendering closer to real-world acoustics, we need to model the physics of sound propagation, a process known as auralization. The industry has proposed and implemented a variety of acoustic propagation modeling methods, each striking a different balance between complexity and accuracy.
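As a point of reference, the traditional per-source approximation can be sketched in a few lines. This is a minimal Python sketch under stated assumptions: a 1/r distance law, a one-pole low-pass whose cutoff falls with distance (the cutoff curve is hypothetical), and no HRTF stage. The output depends only on the source-listener distance; walls, doorways, and materials never enter the computation, which is exactly the limitation described above.

```python
import math


def distance_gain(distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Inverse-distance (1/r) attenuation, clamped to 1.0 inside ref_distance_m."""
    return ref_distance_m / max(distance_m, ref_distance_m)


def one_pole_lowpass(samples, cutoff_hz: float, sample_rate: int = 48000):
    """First-order IIR low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def traditional_render(samples, distance_m: float, sample_rate: int = 48000):
    """Distance-only approximation: low-pass plus 1/r gain (HRTF panning omitted).

    The cutoff curve is a hypothetical choice: 20 kHz at 1 m,
    falling as 1/r to a floor of 2 kHz.
    """
    cutoff_hz = max(2000.0, 20000.0 / max(distance_m, 1.0))
    gain = distance_gain(distance_m)
    return [gain * s for s in one_pole_lowpass(samples, cutoff_hz, sample_rate)]
```

For a steady input, a source at 4 m simply settles at a quarter of its level, slightly dulled, whether or not a wall or doorway lies between it and the listener.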
The real-time computing power of current VR systems still falls short of perfect modeling (solving the acoustic wave equation for every sound propagation event), but with the real-time GPU compute that AMD TrueAudio Next brings, we can achieve far more audio realism than the CPU alone allows. One method that significantly improves the realism of occlusion and reflection in the critical frequency bands is geometric acoustics. Geometric acoustics starts by ray tracing paths (usually a sampled subset) between each sound source and the positions of the listener's ears, then applies a set of algorithms to the traced paths and the material properties at each bounce to generate a unique impulse response for each sound and each ear. Besides reflection, diffusion, and occlusion along the paths, diffraction effects (such as finite-edge diffraction) and HRTF filters can also be modeled within this framework and folded into each time-varying impulse response. During rendering, the impulse responses, which are updated continuously as the source and listener move, are convolved with the corresponding source signals. The results are then mixed separately for each ear to produce the audible output waveform. This approach is scalable and has been implemented on the CPU; with AMD TrueAudio Next, the number of physically modeled sound sources that can be supported increases significantly. By "borrowing" a small subset of the GPU's compute units (roughly 10% to 15%), applications can extend physical modeling to ambient sound sources instead of limiting it to a small number of primary audio cues. With multiple GPUs, or an APU combined with a GPU, quality can scale even further. The two main algorithms that geometric acoustic rendering requires are time-varying convolution (in the audio processing component) and ray tracing (in the propagation component).
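The core of the geometric-acoustics pipeline, turning traced paths into a per-ear impulse response and convolving it with each source before mixing per ear, can be sketched as follows. This is a deliberately simplified Python sketch, not AMD's implementation: each traced path is assumed to be summarized by its total length and a list of per-bounce reflection factors (a hypothetical material model), arrival time is path length divided by the speed of sound, amplitude follows 1/r spreading, and diffraction, frequency-dependent absorption, and HRTF filtering are omitted.

```python
from dataclasses import dataclass, field

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C


@dataclass
class Path:
    length_m: float  # total traced length from source to one ear
    # Hypothetical material model: one amplitude reflection factor per bounce.
    reflectances: list = field(default_factory=list)


def impulse_response(paths, sample_rate=48000):
    """Sum each path as a delayed, attenuated impulse."""
    taps = [int(round(p.length_m / SPEED_OF_SOUND * sample_rate)) for p in paths]
    ir = [0.0] * (max(taps) + 1)
    for p, t in zip(paths, taps):
        gain = 1.0 / max(p.length_m, 1.0)  # spherical spreading, clamped near the ear
        for r in p.reflectances:
            gain *= r  # energy lost at each bounce
        ir[t] += gain
    return ir


def convolve(x, h):
    """Direct linear convolution (clear, not fast)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        if xi == 0.0:
            continue
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def render_binaural(sources, sample_rate=48000):
    """sources: list of (signal, left_ear_paths, right_ear_paths).

    Each source gets its own impulse response per ear; results are mixed per ear.
    """
    left, right = [], []
    for signal, left_paths, right_paths in sources:
        for mix, paths in ((left, left_paths), (right, right_paths)):
            y = convolve(signal, impulse_response(paths, sample_rate))
            if len(y) > len(mix):
                mix.extend([0.0] * (len(y) - len(mix)))
            for i, v in enumerate(y):
                mix[i] += v
    return left, right
```

In a real system the path lists would come from the propagation component (for example, a ray tracer) and would be recomputed as the source and listener move, which is what makes the convolution time-varying.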
For AMD Radeon GPUs, AMD's open-source FireRays library accelerates ray tracing, and the AMD TrueAudio Next library accelerates real-time, time-varying convolution. TrueAudio Next is a high-performance, OpenCL-based library for real-time audio math acceleration, with a particular focus on GPU compute. Beyond low-latency, time-varying convolution, it also supports efficient FFTs and Fast Hartley Transforms (FHT), and it runs on x86 CPUs as well as AMD Radeon GPUs. Having presented TrueAudio Next as a key part of the solution, we still need to answer two important questions. First, can GPU compute shaders support this technique without interfering with graphics rendering and causing stutter or dropped frames? Second, can high-performance GPU audio really deliver glitch-free, low-latency rendering in VR games or high-end film rendering? Conventional wisdom holds that rendering audio on the GPU causes unacceptable latency and hurts graphics performance, but the answer to both questions is yes, which brings us to another important pillar of AMD TrueAudio Next: Compute Unit Reservation, built on asynchronous compute. As a key component of LiquidVR Time Warp and Direct-to-GPU rendering, AMD's asynchronous compute technology is well known in the VR rendering space. Under the control of an efficient hardware scheduler, asynchronous compute supports variable execution priorities and lets work from multiple queues run simultaneously on different sets of CUs, instead of all shader work waiting in a single queue and executing across the entire CU array. AMD's Compute Unit Reservation takes this idea further: when an enabled application requests it, a limited set of CUs can be partitioned off, reserved, and accessed through reserved real-time queues.
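Low-latency convolution with long impulse responses is commonly implemented with partitioned FFT convolution. The following Python sketch shows one generic variant, uniformly partitioned overlap-add with a frequency-domain delay line; it illustrates the technique and is not TrueAudio Next's actual algorithm or API. The impulse response is split into partitions of `block_size` samples, so latency is set by the block size rather than by the impulse response length. `block_size` must be a power of two because the sketch uses a simple recursive radix-2 FFT.

```python
import cmath


def fft(a, invert=False):
    """Recursive radix-2 FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def ifft(a):
    n = len(a)
    return [v / n for v in fft(a, invert=True)]


def latency_ms(block_size, sample_rate):
    """Buffering latency contributed by one block."""
    return 1000.0 * block_size / sample_rate


class PartitionedConvolver:
    """Uniformly partitioned overlap-add convolution (generic sketch)."""

    def __init__(self, ir, block_size):
        self.B = block_size
        n = 2 * block_size  # FFT size: block + partition fits without wrap-around
        self.parts = []
        for start in range(0, len(ir), block_size):
            seg = list(ir[start:start + block_size])
            self.parts.append(fft(seg + [0.0] * (n - len(seg))))
        # Frequency-domain delay line: spectra of the most recent input blocks.
        self.fdl = [[0j] * n for _ in self.parts]
        self.tail = [0.0] * block_size  # second half of the previous output

    def process(self, block):
        assert len(block) == self.B
        n = 2 * self.B
        self.fdl.insert(0, fft(list(block) + [0.0] * self.B))
        self.fdl.pop()
        acc = [0j] * n
        for X, H in zip(self.fdl, self.parts):  # sum of X[k-p] * H[p]
            for i in range(n):
                acc[i] += X[i] * H[i]
        y = [v.real for v in ifft(acc)]
        out = [y[i] + self.tail[i] for i in range(self.B)]
        self.tail = y[self.B:]
        return out
```

With `block_size=64` at 48 kHz the buffering latency is 64 / 48000 s, about 1.33 ms. A 2-second impulse response at 48 kHz would be split into 96000 / 64 = 1500 partitions, each needing a complex multiply-accumulate per block: a regular, highly parallel workload of the kind GPUs handle well.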
For example, on a GPU with 32 CUs, 4 or 8 CUs could be reserved for TrueAudio Next, leaving the remaining 24 to 28 CUs for graphics. The reservation is made entirely by the TrueAudio Next-enabled application, plug-in, or engine (not at system startup), and the CUs are freed again when the application explicitly releases them or exits. In addition, an extra "medium priority" queue can be assigned to the reserved CUs for kernels of slightly lower priority. For time-varying convolution, audio channels that must be low-latency and absolutely glitch-free can use the real-time queue, while less critical impulse response updates use the medium-priority queue.

Compute Unit Reservation provides a set of key advantages that let audio and graphics coexist. The number of reserved CUs is entirely up to the game developer, guided by the plug-in and by the audio engine vendor's recommendations. Audio engines have long experience scaling to the available CPU resources with excellent profiling tools; AMD TrueAudio Next simply adds a new dimension: a large, reliable, configurable private sandbox. There are no surprises: the CU reservation can be set early in game development, so audio and graphics design can proceed independently without worrying that audio will inadvertently "steal" graphics compute resources. The reserved CUs actually provide a tighter (but larger) sandbox than a multi-core CPU running a general-purpose OS. Graphics is isolated from audio, and audio from graphics. Memory bandwidth is the only shared resource, and audio uses far less of it than graphics; DMA transfer latency is too small to matter.
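The split between latency-critical convolution (real-time queue) and less urgent impulse response updates (medium-priority queue) can be illustrated on the CPU. In this Python sketch, which rests on stated assumptions, `process()` stands in for the latency-critical per-block convolution, `update_ir()` stands in for an update produced by some lower-priority task, and a new impulse response takes effect at the next block boundary through a linear crossfade between the outputs of the old and new filters. The class and method names are hypothetical, the direct-form FIR is chosen for clarity rather than speed, and none of this is TrueAudio Next's API.

```python
class CrossfadingConvolver:
    """Block-based FIR convolution whose impulse response can change over time.

    When a new impulse response is queued, the next block is rendered with both
    the old and the new response and linearly crossfaded, avoiding the click a
    hard switch can cause.
    """

    def __init__(self, ir, max_ir_len):
        assert len(ir) <= max_ir_len
        self.max_ir_len = max_ir_len
        self.ir = list(ir)
        self.pending = None
        self.history = []  # last (max_ir_len - 1) input samples, oldest first

    def update_ir(self, new_ir):
        """Queue a new IR (e.g. from a lower-priority task); applied at the next block."""
        assert len(new_ir) <= self.max_ir_len
        self.pending = list(new_ir)

    @staticmethod
    def _fir(ir, buf, n_out):
        """Direct-form FIR output for the last n_out samples of buf."""
        out = []
        for n in range(len(buf) - n_out, len(buf)):
            acc = 0.0
            for k, hk in enumerate(ir):
                if n - k < 0:
                    break  # earlier samples precede the start of the signal
                acc += hk * buf[n - k]
            out.append(acc)
        return out

    def process(self, block):
        m = len(block)
        buf = self.history + list(block)
        y = self._fir(self.ir, buf, m)
        if self.pending is not None:
            y_new = self._fir(self.pending, buf, m)
            # Linear crossfade: weight of the new IR ramps from 1/m up to 1.
            y = [(1 - (i + 1) / m) * a + ((i + 1) / m) * b
                 for i, (a, b) in enumerate(zip(y, y_new))]
            self.ir, self.pending = self.pending, None
        keep = self.max_ir_len - 1
        self.history = buf[-keep:] if keep > 0 else []
        return y
```

Because both filters see the same input history during the crossfade block, the switch is smooth even if the new impulse response is longer than the old one, as long as it fits within `max_ir_len`.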
A glitch-free convolution latency as low as 1.33 milliseconds (64 samples at 48 kHz) can be achieved even with impulse responses longer than 2 seconds, whereas a typical game audio engine has a total buffer latency of 5 to 21 milliseconds. Compute Unit Reservation is a driver feature available to partners under NDA, and the TrueAudio Next library can be used with or without reserved CUs. The open-source AMD TrueAudio Next library, together with driver-controlled Compute Unit Reservation, will bring a new level of audio realism to virtual reality. We look forward to seeing what developers create with it.

Note: The highest technical rank is generally called Fellow, which is usually on a par with VP.

