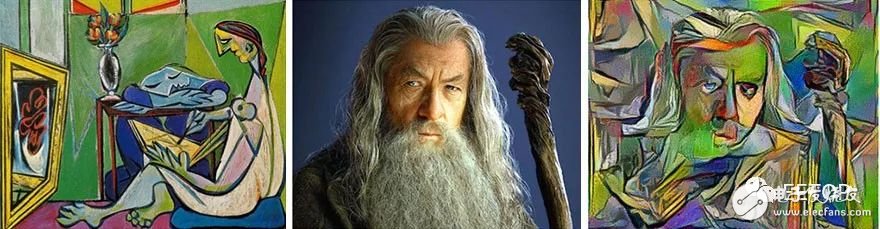

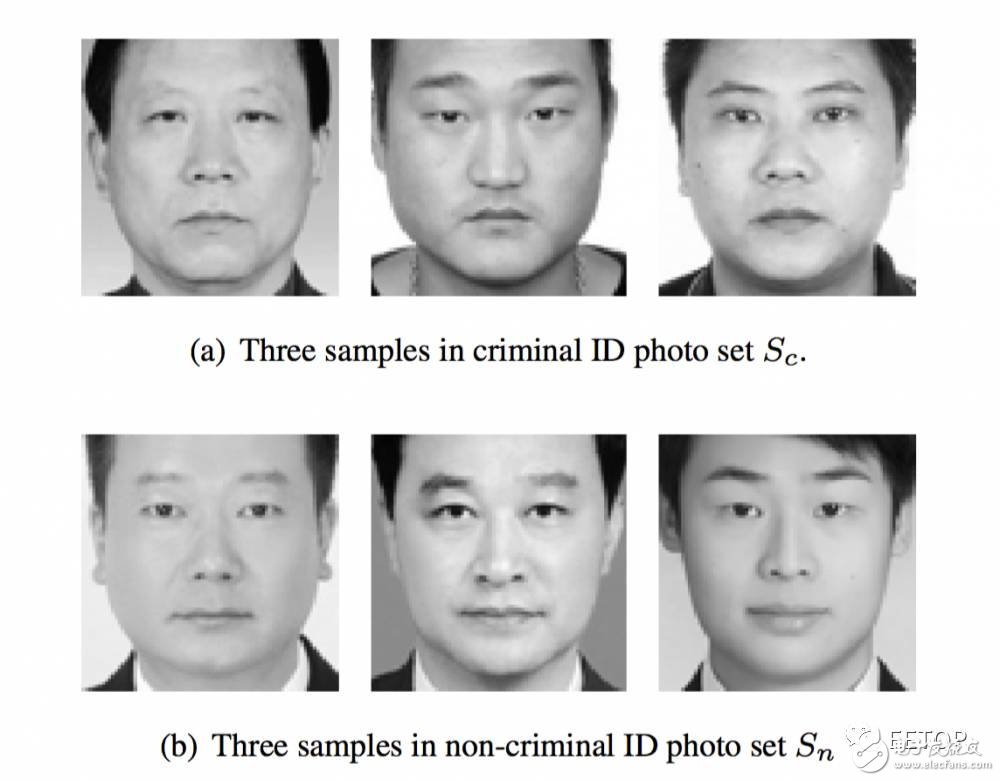

Since the 1960s, people have been expecting sci-fi-level AI like HAL, but until recently computers and robots were still very stupid. Now technology giants and startups are announcing the arrival of an AI revolution: driverless cars, robot doctors, machine investors, and more. PricewaterhouseCoopers estimates that by 2030 AI will contribute $15.7 trillion to the world economy. "AI" was the buzzword of 2017, just as ".com" was in 1999, and everyone claims to be interested in it. Don't be confused by the hype: is it a bubble or something real? Compared with earlier waves of AI, what is actually new?

AI cannot be applied easily or quickly. The most exciting examples of AI come from universities and technology giants. Any self-styled AI expert who promises to revolutionize your company with the latest AI technology is spreading misinformation, and some of them are simply repackaging old technology as AI. Everyone has already experienced the latest AI by using Google, Microsoft, and Amazon services. However, "deep learning" is not something large companies can quickly master and apply to custom internal projects. Most organizations lack enough relevant digital data to train a reliable AI. As a result, AI will not kill all jobs, especially because humans are needed to train and test every AI.

AI can now "see with eyes" and is proficient at some visual tasks, such as identifying cancer or other diseases in medical images, where it is statistically better than human radiologists, ophthalmologists, dermatologists, and others. It can also drive a car and read lips. After learning from samples, AI can paint in any style (such as Picasso's, or yours). Conversely, it can fill in the missing information in a painting to guess what the real photo looked like. AI can look at a screenshot of a web page or app and write code that creates a similar page or app.
AI can also "hear with ears": it can not only understand you, but also listen to the Beatles or to your music, compose new music, or imitate the voice of anyone it hears. The average person cannot tell whether a painting or a song was created by a human or a machine, nor whether a passage was spoken by a human or an AI. An AI trained to win at poker learned to bluff and to deal with hidden cards, potential cheating, and misleading information. Bots trained to negotiate also learned to deceive: to guess when you are lying, and to lie themselves when needed. An AI trained to translate between Japanese and English and between Korean and English can also translate between Korean and Japanese. It seems the translation AI creates its own intermediate language that can represent any sentence regardless of language boundaries.

Machine learning (ML) is a subfield of AI that lets machines learn from experience, that is, from real-world examples. The more data there is, the more it can learn. A machine is said to learn from experience at a task if its performance at that task improves as its experience grows. But most AI is still built from fixed rules and cannot learn. From here on, I will use "machine learning" to mean "AI that learns from data", to emphasize how it differs from other AI.

Artificial neural networks are just one approach to machine learning; others include decision trees, support vector machines, and so on. Deep learning is an artificial neural network with many levels of abstraction. Despite the hype around the word "deep", many machine learning methods are "shallow". Successful machine learning is usually a hybrid, a combination of many methods such as trees + deep learning + others, which are trained separately and then combined.
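Why separately trained methods do better together can be illustrated with a toy majority vote. This is a hypothetical sketch, not the author's method: three invented classifiers are each right 70% of the time, but they make their mistakes on different examples, so a simple vote corrects every error. Real models' errors are rarely this independent, so real gains are smaller.

```python
from collections import Counter

def majority_vote(*predictions: list[int]) -> list[int]:
    """Combine several models by taking the most common label for each example."""
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]

def accuracy(predicted: list[int], truth: list[int]) -> float:
    return sum(p == t for p, t in zip(predicted, truth)) / len(truth)

def flip(labels: list[int], wrong_at: set[int]) -> list[int]:
    """Simulate a binary classifier that is wrong exactly at the given positions."""
    return [1 - y if i in wrong_at else y for i, y in enumerate(labels)]

# Hypothetical ground truth and three hypothetical models with non-overlapping mistakes.
truth = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
model_a = flip(truth, {0, 1, 2})
model_b = flip(truth, {3, 4, 5})
model_c = flip(truth, {6, 7, 8})

ensemble = majority_vote(model_a, model_b, model_c)

for name, preds in [("A", model_a), ("B", model_b), ("C", model_c), ("vote", ensemble)]:
    print(f"{name}: {accuracy(preds, truth):.0%}")
```

Because no two models are wrong on the same example, the other two always outvote the one that errs; if their mistakes overlapped, the vote could not fix them.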
Because each method makes different errors, averaging their results usually outperforms any single method.

Old AI does not "learn". It is rule-based: just a few "if...then..." rules written by humans. It is called AI as long as it solves the problem, but it is not machine learning, because it cannot learn from data. Most of today's AI and automated systems are still rule-based code. Machine learning has been known since the 1960s, but, like the human brain, it needs a lot of computing hardware to process large amounts of data. In the 1980s, training an ML on a PC took months, and digital data was scarce. Hand-written rule-based code could solve most problems quickly, so machine learning was forgotten. With today's hardware, you can train an ML in minutes, we know good parameters, and there is far more digital data. So after 2010, one AI field after another came to be dominated by machine learning. From vision, speech, and translation to game playing, machine learning outperformed rule-based AI, and often humans as well.

Why did AI beat humans at chess in 1997, but not at Go until 2016? Because in 1997 the computer could search through a huge number of possible moves on the 8x8 chess board by brute force, but Go's 19x19 board has so many more possibilities that calculating them all would take a computer more than a billion years. It is like trying to reproduce a whole article by randomly combining letters: impossible. So the only known hope was to train an ML. But ML is approximate, not deterministic: machine learning is probabilistic, useful for statistical analysis but not for exact prediction.

Machine learning makes automation possible, as long as you have the right data ready to train the ML. Most machine learning is supervised learning: the training examples are labeled, where a label is a description or tag attached to each example.
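A crude calculation shows why exhaustive search stops working on the bigger board. The sketch below assumes the simplest possible upper bounds, not counts of legal positions or of possible games: each Go point is empty, black, or white, so there are at most 3^361 board configurations; each chess square is empty or holds one of 12 piece types, so there are at most 13^64. The speed of one billion positions per second is a hypothetical figure.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def digits(n: int) -> int:
    """Number of decimal digits, roughly the power of ten of n."""
    return len(str(n))

chess_upper_bound = 13 ** 64   # 64 squares, each empty or one of 12 piece types
go_upper_bound = 3 ** 361      # 361 points, each empty, black, or white

print("chess bound has", digits(chess_upper_bound), "digits")
print("go bound has", digits(go_upper_bound), "digits")

# Hypothetical machine that checks one billion positions per second.
positions_per_second = 10 ** 9
years_for_go = go_upper_bound // positions_per_second / SECONDS_PER_YEAR
print(f"years to enumerate every Go configuration: {years_for_go:.1e}")
```

Even the chess bound is far too large to enumerate, which is why chess programs search selectively rather than exhaustively; the Go bound is about a hundred orders of magnitude larger still.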
For example, you first need to manually separate photos of cats from photos of dogs, or spam from normal mail. This is crucial: if you label the data incorrectly, the resulting ML will be wrong. Feeding unlabeled data into an ML is unsupervised learning: the ML finds useful patterns and groups in the data, but on its own it cannot solve many problems. So some ML is semi-supervised.

Anomaly detection identifies unusual things such as fraud or cyber intrusions. An ML trained only on old types of fraud will miss new ones, but you can instead ask an ML to warn you of any suspicious deviation. Government departments have begun using ML to detect tax evasion.

Reinforcement learning appears in the 1983 film "WarGames", in which a computer avoids World War III by playing out every scenario at the speed of light. This kind of AI explores millions of failed attempts before finally earning huge rewards. AlphaGo was trained this way: it played against itself millions of times, gaining skills beyond any human. It made moves never seen before, which human players at first considered mistakes, but these moves were later recognized as brilliant. ML has begun to be more creative than human Go players.

When people decide that AI is not really intelligent, the "AI effect" is at work. Subconsciously, people need to believe in magic, and to believe that humans are unique in the universe. Every time a machine surpasses humans at an intelligent activity, such as playing chess, recognizing images, or translating, people say: "That's just brute computing power, not intelligence." Many apps contain AI, but once something is widely used, it is no longer called "intelligence". If "intelligence" is only whatever AI has not yet achieved (that is, skills unique to the brain), then the dictionary has to be updated every year.
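One simple way to "warn of any suspicious deviation" is to learn what normal looks like and flag values far from it. The sketch below is a hypothetical z-score detector using only Python's `statistics` module; the transaction amounts and the threshold of 3 standard deviations are assumptions for illustration, and real fraud systems use far richer features and models.

```python
from statistics import mean, pstdev

def fit_normal_profile(history: list[float]) -> tuple[float, float]:
    """Learn the typical value and spread from past, presumably legitimate, data."""
    return mean(history), pstdev(history)

def flag_anomalies(values: list[float], profile: tuple[float, float],
                   threshold: float = 3.0) -> list[float]:
    """Return values more than `threshold` standard deviations from normal; no fraud labels needed."""
    mu, sigma = profile
    return [v for v in values if abs(v - mu) / sigma > threshold]

# Hypothetical past transaction amounts for one customer.
history = [20, 22, 19, 21, 20, 23, 18, 21]
profile = fit_normal_profile(history)

new_transactions = [21, 250, 19]
print(flag_anomalies(new_transactions, profile))  # prints [250]
```

Because the detector models normal behavior rather than known fraud patterns, it can flag a kind of fraud it has never seen, which is the advantage over an ML trained only on old fraud.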
For example, mathematics was considered an intellectual activity before the 1950s, and now it no longer is. This is really strange.

As for "brute computing power": a human brain has about 100 trillion neural connections, far more than any computer on Earth. Machine learning cannot be "brute force": if a machine tried every possible combination of connections, it would take billions of years. Machine learning only makes "educated guesses based on training", and it uses less computing power than the brain. By the same logic, AI could just as well claim that the human brain is not intelligent, only brute computing power.

Machine learning is not a human brain simulator; real neurons are very different. Machine learning is another path to what the real brain does. Both the brain and machine learning use statistics (probabilities) to approximate complex functions, and both give slightly inaccurate but usable results. Machine learning and the human brain can give different results for the same task because they approach problems differently. Everyone knows that the brain forgets things easily and has many limitations when solving specific math problems, while machines are perfect at memory and math. However, the old idea that "a machine either gives the exact answer or it is broken" is wrong and outdated. Humans make lots of mistakes. You never hear that a human brain is broken; you hear that it needs to study harder. Likewise, an ML is not broken: it needs to study harder, on more varied data.

An ML trained on human prejudice may be racist, sexist, and unfair: in short, just like the human brain. ML should not be trained only on human data, nor used only to mimic human work, behavior, and thinking. The same ML, trained in another galaxy, could imitate an alien brain and let us think with alien minds too. AI is becoming as mysterious as humans.
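How an ML inherits prejudice from its training data can be shown with the simplest possible learner. In this hypothetical sketch, invented historical loan decisions approved qualified applicants from group "A" far more often than equally qualified applicants from group "B"; a model that just counts approval rates per (qualification, group) pair reproduces that gap, because nothing in the data says the gap is unfair.

```python
from collections import defaultdict

# Invented historical decisions: (qualified, group, approved).
history = [
    (True, "A", True), (True, "A", True), (True, "A", True), (True, "A", False),
    (True, "B", True), (True, "B", False), (True, "B", False), (True, "B", False),
]

def train(records):
    """Learn P(approved | qualified, group) by counting, a minimal frequency model."""
    counts = defaultdict(lambda: [0, 0])  # key -> [approvals, total]
    for qualified, group, approved in records:
        counts[(qualified, group)][0] += approved
        counts[(qualified, group)][1] += 1
    return {key: approvals / total for key, (approvals, total) in counts.items()}

def predict(model, qualified, group, cutoff=0.5):
    return model[(qualified, group)] >= cutoff

model = train(history)
print(model)
print("qualified A approved:", predict(model, True, "A"))
print("qualified B approved:", predict(model, True, "B"))
```

Simply deleting the group column is usually not enough in practice, because other features can act as proxies for it.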
The idea that computers cannot be creative, lie, make mistakes, or be humanlike comes from old rule-based AI, which really was predictable; machine learning changes that. The era of calling every newly mastered AI capability "not really intelligent" is over. The only distinction that really matters for AI is general AI versus narrow AI.

Unlike in other sciences, you cannot verify an ML by checking it against a logical theory. The only way to determine whether an ML is correct is to test its results on new data it has never seen. ML is not a black box: you can see the list of "if...then..." rules it generates and runs, but for a human they are usually far too numerous and complicated. ML is a practical science that tries to reproduce the chaos of the real world and human intuition, without giving a simple or theoretical explanation. It is as if you have an idea that works but cannot explain exactly how you came up with it. In the brain we call this inspiration, intuition, or the subconscious; in computers it is machine learning. If you could record every nerve signal a person's brain used to make a decision, would you understand the real reason and process behind it? Maybe, but it would be complicated.

Everyone can intuitively imagine another person's face, whether realistic or Picasso-style. People can also imagine a sound or a musical style. But no one can describe a face, a voice, or a musical style completely and precisely. Humans can only perceive three dimensions; even Einstein could not consciously solve a machine learning math problem in 500 dimensions. Yet our brains use intuition to solve such 500-dimensional problems all the time, as if by magic. Why can't we do it consciously? Imagine the brain handing us a formula with thousands of variables for every idea: the extra information would confuse us and slow our thinking dramatically.
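Two claims in this passage, that an ML can only be checked on data it has never seen and that its learned "if...then..." rules can be inspected, show up even in a tiny example. The sketch below is a hypothetical one-rule learner (a decision stump) on invented data: it picks the threshold that best separates the training labels, prints the resulting rule, and is then scored on a held-out test set. Real models contain thousands or millions of such rules, which is why they are hard to read.

```python
def accuracy(t: float, xs: list[float], ys: list[int]) -> float:
    """Fraction of examples where the rule 'if x > t then 1 else 0' matches the label."""
    return sum((x > t) == bool(y) for x, y in zip(xs, ys)) / len(xs)

def learn_stump(xs: list[float], ys: list[int]) -> float:
    """Choose, among the observed values, the threshold with the best training accuracy."""
    return max(sorted(set(xs)), key=lambda t: accuracy(t, xs, ys))

# Invented data: x is some measurement, y is the label (1 = positive).
train_x = [1.0, 2.0, 3.0, 4.0, 6.0, 7.0, 8.0, 9.0]
train_y = [0, 0, 0, 0, 1, 1, 1, 1]
test_x = [2.5, 5.5, 6.5, 8.5]  # never seen during training
test_y = [0, 0, 1, 1]

t = learn_stump(train_x, train_y)
print(f"learned rule: if x > {t} then 1 else 0")
print("training accuracy:", accuracy(t, train_x, train_y))
print("test accuracy:", accuracy(t, test_x, test_y))
```

The rule is perfect on the training data but misclassifies one of the four unseen points, a gap that only testing on new data can reveal.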
Why bother? No human can work through pages of mathematical calculations for every thought, and we haven't evolved anything like a USB cable on our heads.

Imperfect automation will augment human work rather than eliminate it. If no one can predict something, machine learning cannot either. Many people have trained ML on years of market price changes, yet these AIs still cannot predict market trends. ML only predicts well if past factors and trends stay the same, but stock and economic trends change constantly and are almost random. ML fails when old data is no longer valid or when such changes happen frequently. The learned tasks and rules must be consistent, or at least change rarely, so that you can retrain in time. For example, driving, playing poker, painting in a certain style, predicting disease from health data, and translating between languages can all be done by machine learning, because old examples will remain valid for the foreseeable future.

Machine learning can find correlations in data, but it cannot find something that does not exist. For example, in a strange study called "Automated Inference on Criminality using Face Images", an ML learned from many facial photos of criminals and non-criminals. The researchers claimed that the ML could catch new "bad guys" from a single facial photo, while they "felt" that further research would refute the validity of judging people by appearance. Their dataset was biased: innocent-looking white-collar criminals would only laugh at such methods. The only connections the ML could learn were a happy or angry mouth and the type of collar. Smiling people in white collars were classified as innocent and honest, while sad-looking people in dark collars were associated with "bad guys". Those machine learning researchers tried to judge people's character from their faces, but the ML ended up judging them by their clothing (social class).
Machine learning amplifies an unfair prejudice: thieves wearing cheap clothes on the street are more likely to be caught and punished than corrupt politicians and top corporate fraudsters. Such an ML would find all the street thieves and put them in jail, but no white-collar criminals.

Machine learning does not live in our world the way an adult does. It doesn't know what exists outside its data, and it doesn't even know what is "obvious". For example, the bigger a fire, the more fire trucks are sent. An ML will notice that the more firefighters at a fire, the more damage appears the next day, and conclude that the fire trucks caused the damage. Conclusion: machine learning would send firefighters to jail for arson, since the two are 95% correlated!

In some cases, machine learning can predict things that humans cannot. "Deep Patient" is an ML trained at Mount Sinai Hospital in New York on data from 700,000 patients. It can predict schizophrenia, and no one knows how! Only the ML can do it; humans cannot learn to do the same by studying the ML. That is the problem: for investment, medical, judicial, or military decisions, you may want to know how the AI reached its conclusion, but you can't. You can't know why the ML rejected your loan, why it decided you should go to jail, or why it gave the job to someone else. Is the ML fair? Does it have racial, gender, or other biases? Its calculations are visible, but they are hard to summarize in a human-readable way. Machine learning speaks like a prophet: "You humans wouldn't understand even if I showed you the math, so trust me! You've tested my previous predictions, and they were all right!" Humans never fully explain their decisions either. We give reasons that sound plausible, but they are usually incomplete and oversimplified.
People who consistently get correct answers from ML will start giving false explanations, because that makes it easier for the public to accept the ML's predictions. Others will secretly use ML and present its ideas as their own.

ML is limited because it lacks general intelligence and prior knowledge. Even if you combine all the specialized MLs, or train one ML to do everything, it still cannot do the work of general intelligence. For example, when it comes to understanding language, you can't talk about every topic with Siri, Alexa, or Cortana as you would with a real person; they are just smart assistants. In 2011, IBM's Watson answered faster than the human players on the quiz show Jeopardy!, yet it confused Canada with the United States. ML can produce useful short summaries of long texts, including sentiment analysis, but not as reliably as humans. Chatbots fail to understand many questions. No single AI today can do what is easy for a person: guess whether a customer is angry or being ironic, and then adjust its tone.

There is no general AI like in the movies. But we can still get fragments of sci-fi AI: AI that beats humans in a narrow professional field. The latest news is that narrow fields can include creative tasks and things generally considered uniquely human, such as painting, composing, inventing, guessing, deceiving, and faking emotions, none of which seems to require general AI. No one knows how to build a general AI. That is good news: we already have superhuman specialist workers (narrow AI), but no Terminator or hacker AI that will decide on its own to kill us. Unfortunately, humans can train machines to kill us in an instant. For example, a terrorist might train an autonomous truck to drive onto a sidewalk. An AI with general intelligence might destroy itself rather than follow a terrorist's instructions. An AI's ethical safeguards could be hacked and reprogrammed for illegal purposes.
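Why irony trips up simple text analysis can be seen with a deliberately naive word-counting sentiment scorer. This sketch is not how modern sentiment models work; the tiny word lists are hypothetical, chosen only to show that counting positive and negative words rates an obviously ironic complaint as positive.

```python
import re

# Hypothetical, deliberately tiny sentiment lexicon.
POSITIVE = {"great", "love", "perfect", "thanks"}
NEGATIVE = {"bad", "hate", "broken", "cancelled"}

def naive_sentiment(text: str) -> int:
    """Score = (number of positive words) - (number of negative words); > 0 means 'positive'."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

sincere = "Thanks, the new phone is great and I love it."
ironic = "Great, just perfect. My flight got cancelled again. Thanks a lot!"

print(naive_sentiment(sincere))  # positive, as expected
print(naive_sentiment(ironic))   # also positive, although the customer is angry
```

Recognizing that "great" and "perfect" are sarcastic here requires knowing that a cancelled flight is bad news, the kind of background knowledge the passage says narrow AI lacks.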
Today's AI is neither general nor the AI of science fiction; it always follows human instructions. AI will kill old jobs, but it will also create new ones for machine learning trainers, who are more like pet trainers than engineers. An ML is much harder to train than a pet, because it has no general intelligence: it learns everything it sees in the data, without any filtering or common sense. A pet will think twice before learning to do something bad, such as killing its friends. For an ML, however, serving terrorists or serving hospitals makes no difference, and it will not ask why you want it to do this. An ML does not apologize for its mistakes or for the terror it helps terrorists create. It is not a general AI, after all.

Practical machine learning training has its pitfalls. If you train an ML on photos of items held in a hand, it will treat the hand as part of the item and will not recognize the item on its own. A dog knows how to eat from a person's hand; a stupid ML would eat the food along with your hand. To fix this, you must first train it to recognize hands, then train it to recognize the items alone, and finally train it on hands holding items, labeled "hand holding item X".

Copyright and intellectual property laws need to be updated. Like humans, ML can invent new things. Shown two existing things, A and B, an ML produces C, something completely new. If C is sufficiently different from A, B, and anything else in the world, C could be patented as an invention or protected as an artwork. So who is the creator? Further, what if A and B are patented or copyrighted? When C is very different, the creators of A and B cannot claim that C exists because of A and B.
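The hand-holding pitfall is an instance of what is often called shortcut learning: the model latches onto whatever feature best predicts the labels, whether or not it is the one you meant. The sketch below is a hypothetical one-feature learner on invented, already-extracted features (`hand`, `mug_shape`); it is not an image model. Trained only on photos where the mug is always in a hand, it picks the hand; after adding photos of mugs alone and of empty hands, roughly as the text recommends, it picks the mug.

```python
FEATURES = ("hand", "mug_shape")

def accuracy(feature: str, photos: list[tuple[set[str], int]]) -> float:
    """Accuracy of the rule 'label is 1 exactly when this feature is present'."""
    return sum((feature in seen) == bool(label) for seen, label in photos) / len(photos)

def learn_rule(photos: list[tuple[set[str], int]]) -> str:
    """Pick the single feature that best predicts the training labels."""
    return max(FEATURES, key=lambda f: accuracy(f, photos))

# Invented training set: every mug photo shows a hand; in one, the mug is mostly hidden.
in_hand_only = [
    ({"hand", "mug_shape"}, 1),
    ({"hand", "mug_shape"}, 1),
    ({"hand"}, 1),  # mug hidden by fingers
    (set(), 0),
    (set(), 0),
]
print("learned feature:", learn_rule(in_hand_only))  # the hand, not the mug

# Also show mugs alone and hands holding nothing.
balanced = in_hand_only + [
    ({"mug_shape"}, 1), ({"mug_shape"}, 1),
    ({"hand"}, 0), ({"hand"}, 0),
]
print("learned feature:", learn_rule(balanced))  # now the mug
```

The extra examples break the accidental link between "hand" and the label, so the only feature that still predicts it is the mug itself.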
Suppose that training ML on existing copyrighted paintings, music, architecture, designs, chemical formulas, and stolen user data is not legal. How then do you tell whether a work used results produced by ML, especially when it is not as easy to recognize as Picasso's style? How can you tell whether even a little machine learning was involved? Many people secretly use machine learning and claim the work as their own.

For most jobs in a small company, training a person is much cheaper than training an ML. It is easy to teach a human to drive, but it takes a long time and a lot of effort for a machine to learn. Of course, letting machines drive may be safer than letting humans drive, especially considering people who are drunk, sleepy, looking at their phones, speeding, or reckless. But such expensive, reliable training is only possible in large companies. ML trained cheaply is both unreliable and dangerous, and only a few companies can train reliable AI.

A trained ML can be copied without limit, unlike a brain's experience, which cannot be transferred to another brain. Large providers will sell trained ML for reusable tasks, such as a "radiologist ML". ML can complement a human expert, who will always be needed, but it can replace other "surplus" employees. A hospital can hire one radiologist to supervise the ML instead of hiring many radiologists. The radiologist's job will not disappear, but each hospital will have fewer of them. Companies that train an ML will sell it to many hospitals to recoup their investment. The cost of training ML falls every year as more people learn how to do it. But because of data storage and testing, reliable ML training will never become very cheap. In theory many tasks can be automated, but in practice only some of them are worth the cost of training an ML.
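The economics in this passage reduce to a break-even comparison. The sketch below uses entirely hypothetical figures (training cost, yearly upkeep for storage and testing, salary, number of positions replaced) to show why the same ML can pay off for a provider selling to many hospitals but not for a task done by only a few specialists. None of these numbers come from the text.

```python
def ml_cost(years: float, training: float, upkeep_per_year: float) -> float:
    """One-off training cost plus yearly storage, testing, and supervision."""
    return training + upkeep_per_year * years

def human_cost(years: float, salary: float, workers: int) -> float:
    return salary * workers * years

def worth_automating(years, training, upkeep_per_year, salary, workers) -> bool:
    """True if the ML is cheaper than paying the people it would replace."""
    return ml_cost(years, training, upkeep_per_year) < human_cost(years, salary, workers)

# Hypothetical figures, in arbitrary currency units.
TRAINING, UPKEEP, SALARY, YEARS = 5_000_000, 200_000, 100_000, 10

# Common task: one trained ML reused across many hospitals, replacing 50 positions.
common = worth_automating(YEARS, TRAINING, UPKEEP, SALARY, workers=50)
# Rare specialty: only 2 specialists would be replaced.
rare = worth_automating(YEARS, TRAINING, UPKEEP, SALARY, workers=2)
print(common, rare)
```

The training cost is the same in both cases; what changes the answer is how many people's work it can be spread over.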
For jobs that are too unusual, such as urologist, or translating a lost ancient language, human salaries remain cheaper in the long run than training a one-off ML, because too few people do this work. Beyond ML research, humans will continue to work on general AI.

IQ tests are misguided. An IQ test cannot predict a person's success in life, because intelligence is a combination of many different kinds (visual, linguistic, logical, interpersonal, and so on) that cannot be measured by a single IQ number. We consider insects "stupid" compared with humans, but mosquitoes have always outperformed humans at the single task of "bite and escape". Every month, AI beats humans at another narrow task, as narrow as a mosquito's skill. Waiting for a singularity, the moment AI beats us humans at everything, is ridiculous: we are already living through many narrow singularities. Once AI beats humans at something, everyone expects the people who now only supervise the AI to give up that work.

I keep reading articles claiming that humans will retain unique, imperfect handmade work, but in fact AI can fake imperfection: it will learn to put slightly different defects into each finished product, just like handmade work. AI can be creative, but it still lacks general intelligence. For example, comedians and politicians have safe jobs: they don't need specialized (narrow) study or degrees, but they can talk about anything with humor and persuasion. If your job is a complex but narrow task, for example if you are a radiologist, an ML will be trained to replace you. You need general intelligence!

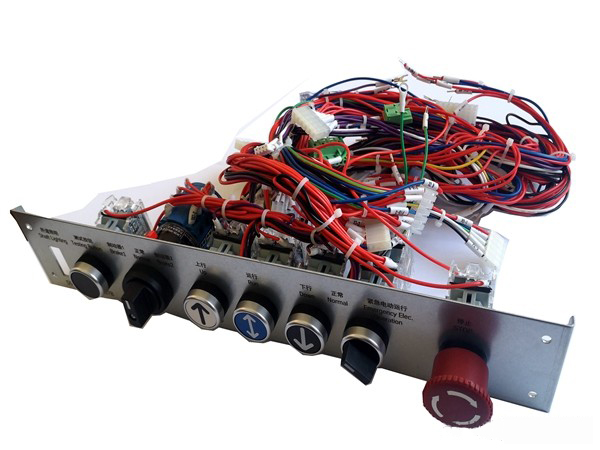


(Machine learning cannot find correlations with things that don't exist, such as a criminal tendency in faces. But the data is biased: it contains no smiling white-collar criminals! Machine learning learns these prejudices.)