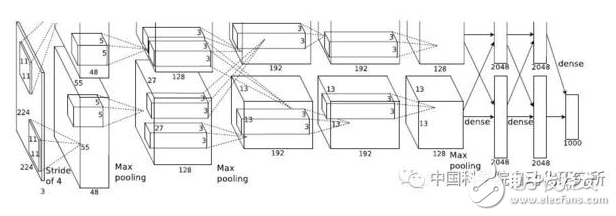

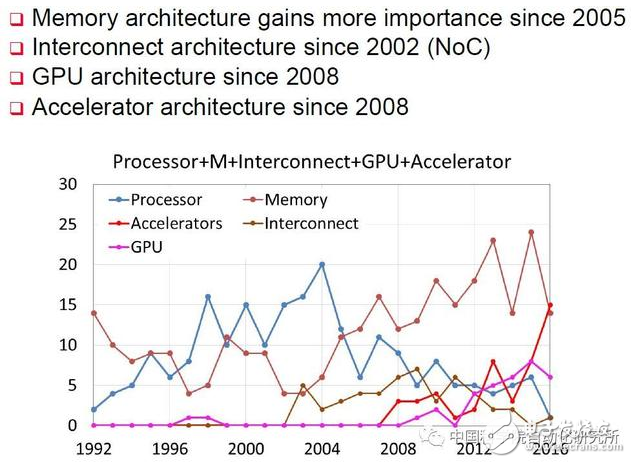

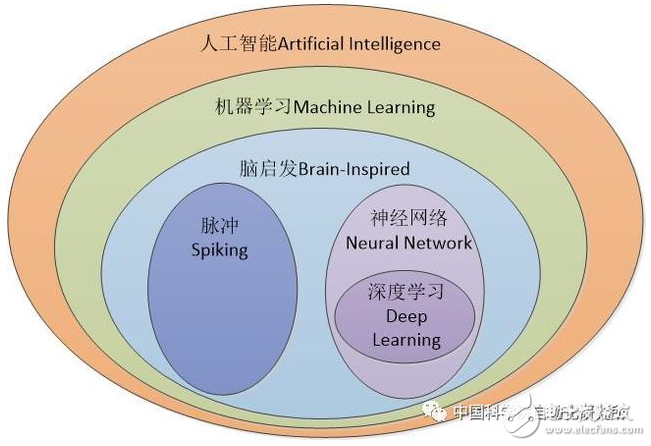

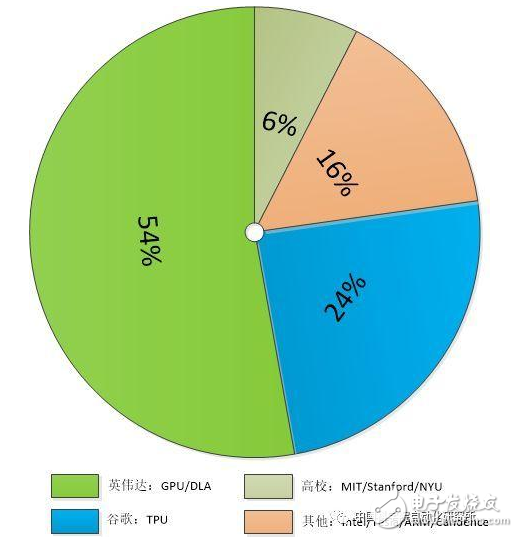

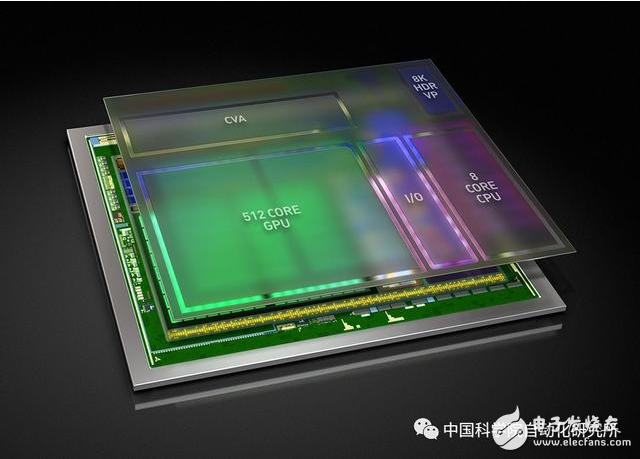

This article analyzes the landscape and characteristics of AI chips at home and abroad. The author believes that in the field of AI chips, foreign chip giants hold most of the market share and have an absolute lead in talent accumulation and company acquisitions. Domestic AI start-ups, by contrast, are in a fragmented free-for-all. In particular, each start-up's AI chip has its own unique architecture and software development kit; it can neither integrate into the ecosystems established by Nvidia and Google nor muster the strength to compete with them.

If the human-machine match between AlphaGo and Lee Sedol in March 2016 made a big impact only on the tech community and the Go world, then AlphaGo's match in May 2017 against Ke Jie, the world's top-ranked Go player, pushed artificial intelligence technology into public view. AlphaGo is the first artificial intelligence program to defeat a human professional Go player and the first to defeat a Go world champion. It was developed by a team at Google's DeepMind led by Demis Hassabis, and its working principle is "deep learning."

In fact, deep learning had already sparked extensive discussion in the academic community as early as 2012. In that year's ImageNet Large Scale Visual Recognition Challenge (ILSVRC), AlexNet, a neural network architecture with 5 convolutional layers and 3 fully connected layers, achieved a record-low top-5 error rate of 15.3%, while the second-place entry managed only 26.2%. Since then, networks with more layers and more complex structures have emerged, such as ResNet, GoogLeNet, VGGNet, and Mask R-CNN, as well as the generative adversarial network (GAN), which became popular last year.
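To make the top-5 error metric quoted above concrete, here is a minimal sketch of how it is computed: a prediction counts as correct if the true label is among the five highest-scoring classes. The function name `top5_error` and the toy scores are illustrative assumptions, not data from the challenge.

```python
def top5_error(scores, labels):
    """Fraction of samples whose true label is NOT among the 5 highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Indices of the five largest scores in this row.
        top5 = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:5]
        if label not in top5:
            misses += 1
    return misses / len(labels)


# Toy example (made-up numbers): 4 samples, 8 classes.
SCORES = [
    [0.1, 0.9, 0.3, 0.2, 0.5, 0.4, 0.0, 0.6],
    [0.8, 0.1, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],
    [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40],
    [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],
]
LABELS = [1, 6, 3, 4]  # only the second sample's label falls outside its top 5

print(top5_error(SCORES, LABELS))  # 0.25
```

On this reading, AlexNet's 15.3% means that for roughly 15 of every 100 test images the correct class was not among its five best guesses.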
Whether it is AlexNet, which won the visual recognition challenge, or AlphaGo, which defeated Ke Jie, neither could have been realized without the core of modern information technology: the processor, whether a traditional CPU or GPU or an emerging dedicated acceleration unit, the NNPU (Neural Network Processing Unit). At ISCA 2016, the top international conference on computer architecture, a small workshop on "Architecture 2030" was held. Prof. Xie Yuan of UCSB, a Hall of Fame member, surveyed the papers accepted at ISCA since 1991: accelerator-related papers first appeared in 2008 and peaked in 2016, surpassing the three traditional areas of processors, memory, and interconnect architecture. That same year, the "Neural Network Instruction Set" paper submitted by the research group of Chen Yunji and Chen Tianshi at the Institute of Computing Technology, Chinese Academy of Sciences, was the highest-scoring paper at ISCA 2016.

Before introducing AI chips at home and abroad in detail, I expect some readers may be wondering: isn't this all just neural networks and deep learning? So I think it is necessary to clarify the concepts of artificial intelligence and neural networks. In particular, in the “Three-year Action Plan for Promoting the Next-Generation Artificial Intelligence Industry Development (2018-2020)” released by the Ministry of Industry and Information Technology in 2017, the description of the development target makes it easy to conclude that artificial intelligence is neural networks and that an AI chip is a neural network chip. It describes the target state as follows: the overall core basic capabilities of artificial intelligence have been significantly enhanced, smart sensor technology products have achieved breakthroughs, and design, foundry, and packaging and testing technologies have reached international standards.
Neural network chips have been mass-produced and have achieved large-scale application in key areas, and open-source development platforms have initially acquired the capability to support the rapid development of the industry.

In fact, artificial intelligence is not the same thing as neural networks. Artificial intelligence is a very old concept, and neural networks are only a subset of it. As early as 1956, John McCarthy, the Turing Award winner known as the "Father of Artificial Intelligence," defined artificial intelligence as the science and engineering of making intelligent machines. In 1959, Arthur Samuel defined machine learning, a subfield of artificial intelligence, as giving computers the ability to learn without being explicitly programmed, which is now recognized as the earliest and most accurate definition of machine learning. The neural networks and deep learning we hear about every day belong to machine learning and are inspired by mechanisms of the brain. Another important research area is the spiking neural network; its representative institutions and companies in China are the Brain Computing Research Center of Tsinghua University and Shanghai Xijing Technology.

Now we can finally turn to the current state of AI chips at home and abroad. Of course, these are only my personal observations and humble opinions.

Foreign: Technology giants hold a clear advantage

Thanks to their unique technology and application advantages, Nvidia and Google together account for almost 80% of the market for artificial intelligence processing. With Google's launch of its Cloud TPU service and Nvidia's launch of Xavier, that share is expected to expand further in 2018. Other vendors, such as Intel, Tesla, ARM, IBM, and Cadence, also have a place in the field of artificial intelligence processors.
Of course, these companies focus on different areas. For example, Nvidia focuses mainly on GPUs and autonomous driving, Google on the cloud market, and Intel on computer vision, while Cadence provides IP for accelerating neural network computation. If the aforementioned companies lean mainly toward hardware such as processor design, ARM leans mainly toward software and is dedicated to providing efficient algorithms for machine learning and artificial intelligence.

Note: The table above gives the latest data available for each developer as of 2017.

The leader - Nvidia

In artificial intelligence, Nvidia can be said to be the company with the broadest coverage and the largest market share. Its product lines span self-driving cars, high-performance computing, robotics, healthcare, cloud computing, gaming, and video. Speaking of Xavier, its new artificial intelligence supercomputer for self-driving cars, NVIDIA CEO Jen-Hsun Huang said: “Of the SoC efforts I know of, this is an amazing one. We have been developing chips for a long time.”

Xavier is a complete system-on-chip (SoC) that integrates a new GPU architecture called Volta, a custom 8-core CPU architecture, and a new computer vision accelerator. The processor delivers 20 TOPS (trillion operations per second) of performance while consuming only 20 watts. A single Xavier AI processor, with 7 billion transistors and built on a cutting-edge 16nm FinFET process, can replace the current DRIVE PX 2 configuration of two mobile SoCs and two discrete GPUs while consuming only a small fraction of its power.
At CES 2018 in Las Vegas, NVIDIA introduced three Xavier-based artificial intelligence processors: a product focused on bringing augmented reality (AR) technology to automotive applications; DRIVE IX, which further simplifies building and deploying in-vehicle artificial intelligence assistants; and a revision of Pegasus, its proprietary brain for autonomous taxis. These further extend its lead.

The academics - Google

If you know only of Google's artificial intelligence products such as AlphaGo, driverless cars, and the TPU, then you should also know the technical heavyweights behind them: legendary Google chip engineer Jeff Dean; Fei-Fei Li, chief scientist of the Google Cloud team and director of the Stanford AI Lab; John Hennessy, chairman of Alphabet; and David Patterson, Distinguished Engineer at Google.

Today, Moore's Law has run into both technical and economic bottlenecks, and processor performance growth has been slowing. Society's demand for computing power, however, has not slowed; with the rise of new applications such as mobile applications, big data, and artificial intelligence, new requirements have emerged for computing power, power consumption, and computing cost. Unlike traditional software programming models that rely entirely on general-purpose CPUs, a heterogeneous computing system includes a variety of processing units designed as domain-specific architectures (DSAs). Each DSA processing unit is responsible for a particular domain and is optimized for it; when the system encounters a relevant computation, the corresponding DSA processor handles it. Google is a practitioner of heterogeneous computing, and the TPU is a good example of heterogeneous computing in artificial intelligence applications.
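The dispatch idea behind heterogeneous computing described above can be sketched as a simple lookup: each workload domain maps to a specialized unit, and anything without a specialized unit falls back to the general-purpose CPU. This is only a toy illustration; the handler names, the domain strings, and the `dispatch` function are all hypothetical and do not correspond to any real runtime or vendor API.

```python
# Hypothetical stand-ins for real devices; each just tags its result
# with the name of the unit that "ran" the computation.
def run_on_cpu(data):
    return ("cpu", sum(data))


def run_on_tpu(data):
    return ("tpu", sum(data))


def run_on_vpu(data):
    return ("vpu", sum(data))


# Each domain-specific architecture (DSA) registers for the domain it is optimized for.
DSA_REGISTRY = {
    "neural_network": run_on_tpu,
    "vision": run_on_vpu,
}


def dispatch(domain, data):
    """Route a computation to the DSA registered for its domain, else to the CPU."""
    handler = DSA_REGISTRY.get(domain, run_on_cpu)
    return handler(data)


print(dispatch("neural_network", [1, 2, 3]))  # ('tpu', 6)
print(dispatch("text_editing", [1, 2]))       # ('cpu', 3)
```

A real system makes this decision in compilers, drivers, and schedulers rather than in a dictionary, but the division of labor is the same.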
The second-generation TPU chip released in 2017 not only strengthened artificial intelligence capabilities in both training and inference, but Google also set out seriously to market it. According to Google's internal tests, the second-generation chip can cut machine learning training time in half compared with GPUs on the market. A second-generation TPU comprises four chips and can perform 180 trillion floating-point operations per second; 64 TPUs combined into a so-called TPU Pod can deliver approximately 11,500 trillion floating-point operations per second.

The disruptor in computer vision - Intel

Intel, the world's largest computer chip maker, has in recent years been seeking markets beyond computers, and the battle for artificial intelligence chips has become one of its core strategies. To strengthen its position in artificial intelligence chips, Intel acquired FPGA maker Altera for $16.7 billion and autonomous driving technology company Mobileye for $15.3 billion, as well as machine vision company Movidius and Yogitech, a company that provides safety tools for self-driving car chips. These moves underscore how actively this giant at the heart of the PC era is transforming itself for the future.

Myriad X is the Vision Processing Unit (VPU) introduced in 2017 by Movidius, an Intel subsidiary. It is a low-power system-on-chip (SoC) for accelerating deep learning and artificial intelligence on vision-based devices such as drones, smart cameras, and VR/AR headsets. Myriad X is the world's first SoC equipped with a dedicated neural network computing engine for accelerating deep learning inference on the device.
The neural network computing engine is an on-chip hardware module designed to run deep-learning-based neural networks at high speed and low power without sacrificing accuracy, allowing devices to see, understand, and respond to their surroundings in real time. With this neural computing engine, the Myriad X architecture delivers 1 TOPS of compute performance for deep-learning-based neural network inference.
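The throughput figures quoted in this article can be cross-checked with simple arithmetic. The sketch below uses only numbers stated in the text (180 trillion floating-point operations per second per second-generation TPU, 64 TPUs per Pod, and 20 TOPS at 20 watts for Xavier); the variable names are illustrative.

```python
# Second-generation TPU, as quoted: 180 TFLOPS per four-chip TPU, 64 TPUs per Pod.
TPU_V2_TFLOPS = 180
TPUS_PER_POD = 64

pod_tflops = TPU_V2_TFLOPS * TPUS_PER_POD  # 11520, i.e. "approximately 11,500"
pod_pflops = pod_tflops / 1000             # 11.52 PFLOPS

# Xavier, as quoted: 20 TOPS at 20 watts.
XAVIER_TOPS = 20
XAVIER_WATTS = 20
xavier_tops_per_watt = XAVIER_TOPS / XAVIER_WATTS  # 1.0 TOPS per watt

print(f"TPU Pod: {pod_tflops} TFLOPS ({pod_pflops} PFLOPS)")
print(f"Xavier efficiency: {xavier_tops_per_watt} TOPS/W")
```

Note that TOPS and TFLOPS count different kinds of operations (typically integer versus floating-point), so these figures are not directly comparable across chips.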